Today, we are excited to announce that DocumentDB: API for MongoDB is generally available. API for MongoDB combines the power of the DocumentDB database engine with the convenience of a managed service and the familiarity of the MongoDB SDKs and tools. With general availability, we are introducing a suite of new features that improve the availability, scalability, and usability of the service.

What is API for MongoDB?

DocumentDB: API for MongoDB is a flavor of DocumentDB that enables MongoDB developers to use familiar SDKs, tool chains, and libraries to develop against DocumentDB. MongoDB developers can now enjoy the advantages of DocumentDB, which include auto-indexing, no server management, limitless scale, enterprise-grade availability backed by service level agreements (SLAs), and enterprise-grade customer support.

What’s new?

Between preview and general availability, we have reached several important milestones. We are proud to introduce the following major features:

- Sharded Collections

- Global Databases

- Read-only Keys

- Additional portal metrics

Sharded Collections– By specifying a shard key, API for MongoDB automatically distributes your data across multiple partitions to scale out both storage and throughput. Sharded collections are an excellent option for applications that ingest large volumes of data or that require high-throughput, low-latency access to data. Sharded collections can be scaled in a matter of seconds in the Azure portal and can grow to a nearly limitless amount of both storage and throughput.
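To make the shard-key idea concrete, here is a minimal Python sketch. It does two things. First, it builds a MongoDB-style `shardCollection` command document with a hashed key. Whether your account accepts this command, rather than only the portal workflow described above, is an assumption to verify. Second, it shows how hashing a shard key value spreads documents across partitions. The MD5-based `partition_for` helper is a toy stand-in, not the hash function DocumentDB actually uses, and the names `store`, `orders`, and `customerId` are hypothetical.

```python
import hashlib
from collections import Counter
from typing import Any


def shard_collection_command(database: str, collection: str, shard_key: str) -> dict:
    """Build a MongoDB-style shardCollection command document with a hashed key.

    The command name must be the first key; Python 3.7+ dicts keep insertion order.
    """
    return {"shardCollection": f"{database}.{collection}", "key": {shard_key: "hashed"}}


def partition_for(shard_key_value: Any, partition_count: int) -> int:
    """Map a shard key value to a partition index.

    Toy illustration of hash partitioning: MD5 is used only because it gives a
    stable, well-spread hash. It is NOT the service's real partitioning scheme.
    """
    if partition_count <= 0:
        raise ValueError("partition_count must be positive")
    digest = hashlib.md5(repr(shard_key_value).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


if __name__ == "__main__":
    # Hypothetical database, collection, and shard key names.
    print(shard_collection_command("store", "orders", "customerId"))

    # Spread 10,000 synthetic customer IDs across 4 partitions and count them.
    counts = Counter(partition_for(f"customer-{i}", 4) for i in range(10_000))
    print(sorted(counts.items()))
```

Documents with the same shard key value always land in the same partition, which is why a key with many distinct, evenly used values scales best. With pymongo, the command document could be sent through `Database.command`.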

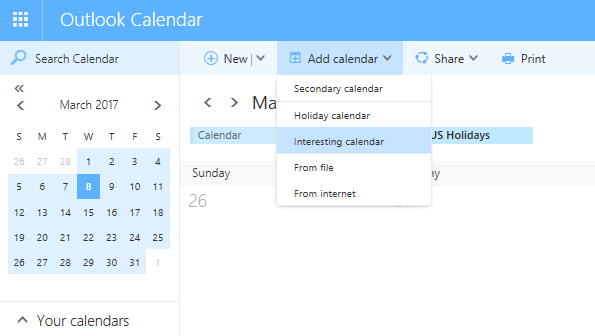

Global Databases– API for MongoDB now lets you replicate your data across multiple regions for high availability. You can replicate your data to any of Azure’s 30+ datacenters with just a few clicks in the Azure portal. Global databases are a great option for serving low-latency requests to users around the world or for preparing for disaster recovery (DR) scenarios. They support both manual and policy-driven failovers, giving you full control.
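A policy-driven failover can be pictured as an ordered list of regions. When the current write region becomes unavailable, the next available region in priority order takes over. The Python sketch below models only that selection rule. It calls no Azure API, and the `Region` type, its `failover_priority` field, and the region names are hypothetical illustrations rather than the service's actual data model.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Region:
    name: str
    failover_priority: int  # 0 = preferred write region; higher values are fallbacks
    available: bool = True


def select_write_region(regions: Sequence[Region]) -> Region:
    """Return the available region with the lowest failover priority.

    Illustrative model of a priority-based failover policy only.
    """
    candidates = [r for r in regions if r.available]
    if not candidates:
        raise RuntimeError("no available region")
    return min(candidates, key=lambda r: r.failover_priority)


if __name__ == "__main__":
    # Hypothetical three-region deployment with the primary region down.
    regions = [
        Region("West US", 0, available=False),
        Region("East US", 1),
        Region("North Europe", 2),
    ]
    print(select_write_region(regions).name)
```

A manual failover corresponds to the operator choosing the new write region directly. A policy-driven failover applies a rule like this one automatically.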

Read-only Keys– API for MongoDB now supports read-only keys, which will only allow read operations on the API for MongoDB database.
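A read-only key is used like any other key: it goes in the password position of the MongoDB connection string. The Python sketch below assembles such a string. The host suffix `documents.azure.com`, port `10255`, and the `ssl=true&replicaSet=globaldb` options reflect the format commonly shown in the Azure portal. Treat them as assumptions and prefer copying the exact string from your account. The account name and key in the usage note are hypothetical.

```python
from urllib.parse import quote_plus


def build_connection_string(account: str, key: str) -> str:
    """Build a MongoDB connection string for an API for MongoDB account.

    Host suffix, port, and query options are assumptions based on the usual
    portal-provided format; verify them against your own account.
    """
    # Keys are base64 text and may contain '+', '/', and '=', which must be
    # percent-encoded inside the userinfo part of a MongoDB URI.
    user = quote_plus(account)
    password = quote_plus(key)
    return (
        f"mongodb://{user}:{password}@{account}.documents.azure.com:10255/"
        "?ssl=true&replicaSet=globaldb"
    )


if __name__ == "__main__":
    # Hypothetical account name and (fake) read-only key.
    print(build_connection_string("myaccount", "ab+c/d=="))
```

You could pass the resulting string to `pymongo.MongoClient`. With a read-only key, queries should succeed, and the service should reject inserts, updates, and deletes. This makes such keys a reasonable fit for reporting or analytics clients.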

Portal Metrics– To improve visibility into the database, we have added new metrics to the Azure portal. For all API for MongoDB databases, the portal now shows the number of requests, request charges, and requests that resulted in errors. To supplement the portal metrics, we have also added a custom command, GetLastRequestStatistics, which lets you programmatically determine a command’s request charge.
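The custom command is sent like any other database command, and the charge is read from its response. The Python sketch below keeps the server call out of the testable code and shows only the command document and response handling. The spelling `getLastRequestStatistics` and the response fields `ok` and `RequestCharge` follow current Azure documentation for this command. Treat them as assumptions to confirm against your account. The sample responses in any tests are illustrative, not real measurements.

```python
from typing import Iterable, Mapping

# Command document for the custom statistics command (casing as in current docs).
LAST_REQUEST_STATISTICS = {"getLastRequestStatistics": 1}


def request_charge(response: Mapping) -> float:
    """Extract the request charge from a getLastRequestStatistics response."""
    if response.get("ok") != 1:
        raise ValueError("statistics command did not succeed")
    return float(response["RequestCharge"])


def total_charge(responses: Iterable[Mapping]) -> float:
    """Sum the request charges reported by several statistics responses."""
    return sum(request_charge(r) for r in responses)
```

With pymongo, you would run `db.command(LAST_REQUEST_STATISTICS)` immediately after the operation you want to measure and pass the returned document to `request_charge`. Summing charges across a workload gives a rough programmatic counterpart to the request-charge metric in the portal.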

What’s next?

General availability is just the beginning of the features and improvements we have in store for DocumentDB: API for MongoDB. In the near future, we will release support for unique indexes and a couple of major performance improvements. Stay tuned!

In addition to API for MongoDB’s general availability, we are announcing a preview of a Spark connector. Visit our GitHub repo for more information.

We hope you take advantage of these new features and capabilities, and please continue to provide feedback on what you want to see next. Try out DocumentDB: API for MongoDB today by signing up for a free trial and creating an API for MongoDB account.

Stay up-to-date on the latest Azure DocumentDB news and features by following us on Twitter @DocumentDB.