Today our team is happy to present our latest release with TypeScript 2.2!

For those who haven’t yet heard of it, TypeScript is a simple extension to JavaScript to add optional types along with all the new ECMAScript features. TypeScript builds on the ECMAScript standard and adds type-checking to make you way more productive through cleaner code and stronger tooling. Your TypeScript code then gets transformed into clean, runnable JavaScript that even older browsers can run.

While there are a variety of ways to get TypeScript set up locally in your project, the easiest way to get started is to try it out on our site or just install it from npm:

npm install -g typescript

If you’re a Visual Studio 2015 user with update 3, you can install TypeScript 2.2 from here. You can also grab this release through NuGet. Support in Visual Studio 2017 will come in a future update.

If you’d rather not wait for TypeScript 2.2 support by default, you can configure Visual Studio Code and our Sublime Text plugin to pick up whatever version you need.

As usual, we’ve written up the new features on our what’s new page, but we’d like to highlight a couple of them.

More quick fixes

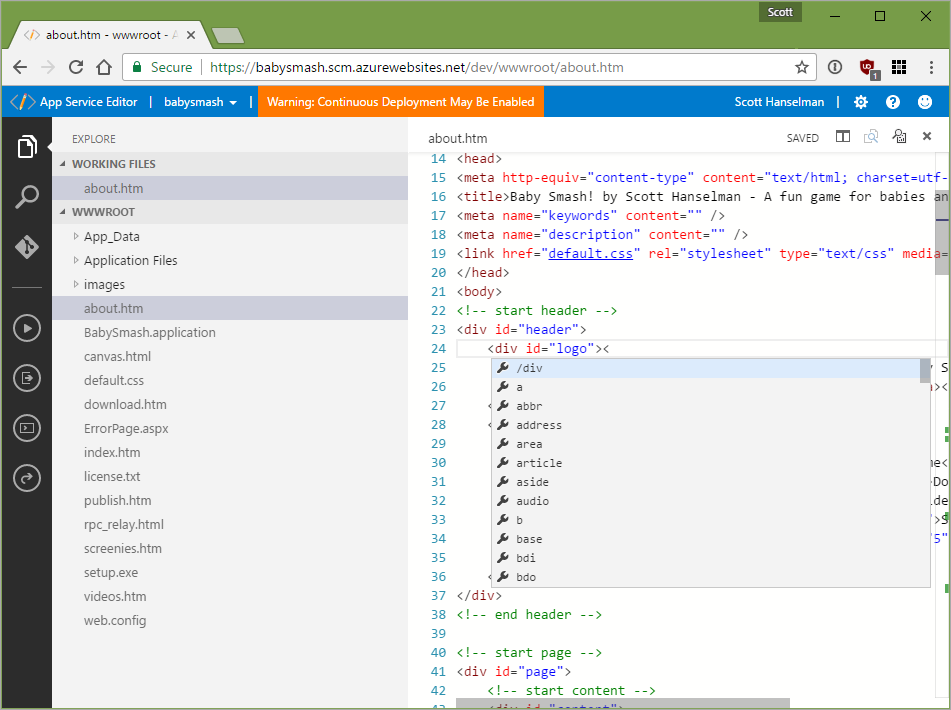

One of the areas we focus on in TypeScript is its tooling – tooling can be leveraged in any editor with a plugin system. This is one of the things that makes the TypeScript experience so powerful.

With TypeScript 2.2, we’re bringing even more goodness to your editor. This release introduces some more useful quick fixes (also called code actions) which can guide you in fixing up pesky errors. These include:

- Adding missing imports

- Adding missing properties

- Adding forgotten this. to variables

- Removing unused declarations

- Implementing abstract members

With just a few of these, TypeScript practically writes your code for you.

As you write up your code, TypeScript can give suggestions each step of the way to help out with your errors.

Expect similar features in the future. The TypeScript team is committed to ensuring that the JavaScript and TypeScript community gets the best tooling we can deliver.

With that in mind, we also want to invite the community to take part in this process. We’ve seen that code actions can really delight users, and we’re very open to suggestions, feedback, and contributions in this area.

The object type

The object type is a new type in 2.2 that matches any types except for primitive types. In other words, you can assign anything to the object type except for boolean, number, string, null, undefined, and symbol.

object is distinct from the {} and Object types in this respect due to structural compatibility. Because the empty object type ({}) also matches primitives, it couldn’t model APIs like Object.create which truly only expect objects – not primitives. object on the other hand does well here in that it can properly reject being assigned a number.
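As a rough sketch of the difference, the snippet below wraps Object.create in a helper whose parameter is typed as object. The name createFrom and the sample base value are made up for illustration; only Object.create and Object.getPrototypeOf are real APIs.

```typescript
// Hypothetical wrapper (not part of TypeScript or its libraries):
// the parameter accepts any non-primitive value, or null.
function createFrom(proto: object | null): any {
    return Object.create(proto);
}

// Sample prototype object, assumed purely for illustration.
const base = {
    greet() {
        return "hi";
    }
};

// OK: an object literal is assignable to 'object'.
const derived = createFrom(base);
derived.greet();

// OK: arrays and functions are non-primitive as well.
createFrom([1, 2, 3]);
createFrom(() => {});

// Each of these is rejected at compile time, because primitives
// are not assignable to 'object':
// createFrom(42);
// createFrom("hello");
// createFrom(true);
```

Had the parameter been typed as {} instead, the commented-out calls would type-check and only fail once Object.create ran.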

We’d like to extend our thanks to members of our community who proposed and implemented the feature, including François de Campredon and Herrington Darkholme.

Easier string indexing behavior

TypeScript has a concept called index signatures. Index signatures are part of a type, and tell the type system what the result of an element access should be. For instance, in the following:

    interface Foo {
        // Here is a string index signature:
        [prop: string]: boolean;
    }

    declare const x: Foo;

    const y = x["hello"];

Foo has a string index signature that says “whenever indexing with a string, the output type is a boolean.” The core idea is that index signatures here are meant to model the way that objects often serve as maps/dictionaries in JavaScript.

Before TypeScript 2.2, writing something like x["propName"] was the only way you could make use of a string index signature to grab a property. A little surprisingly, writing a property access like x.propName wasn’t allowed. This is slightly at odds with the way JavaScript actually works since x.propName is semantically the same as x["propName"]. There’s a reasonable argument to allow both forms when an index signature is present.

In TypeScript 2.2, we’re doing just that and relaxing the old restriction. What this means is that things like testing properties on a JSON object have become dramatically more ergonomic.

    interface Config {
        [prop: string]: boolean;
    }

    declare const options: Config;

    // Used to be an error, now allowed!
    if (options.debugMode) {
        // ...
    }

Better class support for mixins

We’ve always meant for TypeScript to support the JavaScript patterns you use no matter what style, library, or framework you prefer. Part of meeting that goal involves having TypeScript more deeply understand code as it’s written today. With TypeScript 2.2, we’ve worked to make the language understand the mixin pattern.

We made a few changes that involved loosening some restrictions on classes, as well as adjusting the behavior of how intersection types operate. Together, these adjustments actually allow users to express mixin-style classes in ES2015, where a class can extend anything that constructs some object type. This can be used to bridge ES2015 classes with APIs like Ember.extend.

As an example of such a class, we can write the following:

    type Constructable = new (...args: any[]) => object;

    function Timestamped<BC extends Constructable>(Base: BC) {
        return class extends Base {
            private _timestamp = new Date();

            get timestamp() {
                return this._timestamp;
            }
        };
    }

and dynamically create classes

    class Point {
        x: number;
        y: number;

        constructor(x: number, y: number) {
            this.x = x;
            this.y = y;
        }
    }

    const TimestampedPoint = Timestamped(Point);

and even extend from those classes

    class SpecialPoint extends Timestamped(Point) {
        z: number;

        constructor(x: number, y: number, z: number) {
            super(x, y);
            this.z = z;
        }
    }

    let p = new SpecialPoint(1, 2, 3);

    // 'x', 'y', 'z', and 'timestamp' are all valid properties.
    let v = p.x + p.y + p.z;
    p.timestamp.getMilliseconds();

The react-native JSX emit mode

In addition to the preserve and react options for JSX, TypeScript now introduces the react-native emit mode. This mode is like a combination of the two, in that it emits to .js files (like --jsx react), but leaves JSX syntax alone (like --jsx preserve).

This new mode reflects React Native’s behavior, which expects all input files to be .js files. It’s also useful for cases where you want to just leave your JSX syntax alone but get .js files out from TypeScript.

Support for new.target

With TypeScript 2.2, we’ve implemented ECMAScript’s new.target meta-property. new.target is an ES2015 feature that lets constructors figure out if a subclass is being constructed. This feature can be handy since ES2015 doesn’t allow constructors to access this before calling super().
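To make that a bit more concrete, here is a minimal sketch of a base constructor using new.target to tell whether it is being invoked directly or through a subclass. The Shape and Circle classes and the constructedDirectly property are hypothetical names chosen for this example.

```typescript
// Hypothetical classes, for illustration only.
class Shape {
    readonly constructedDirectly: boolean;

    constructor() {
        // new.target refers to the constructor that 'new' was applied to:
        // Shape for 'new Shape()', Circle for 'new Circle()'.
        this.constructedDirectly = new.target === Shape;
    }
}

class Circle extends Shape {}

const plain = new Shape();
const round = new Circle();

console.log(plain.constructedDirectly); // true
console.log(round.constructedDirectly); // false
```

Because the check lives in the base constructor, subclasses get it without writing any code of their own.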

What’s next?

Our team is always looking forward, and is now hard at work on TypeScript 2.3. While our team’s roadmap should give you an idea of what’s to come, we’re excited for our next release, where we’re looking to deliver:

- default types for generics

- async iterator support

- downlevel generator support

Of course, that’s only a preview for now.

We hope TypeScript 2.2 makes you even more productive, and allows you to be even more expressive in your code. Thanks for taking the time to read through, and as always, happy hacking!