New APIs available for custom visuals developers

Power BI reports in SQL Server Reporting Services: January 2017 Technical Preview now available

Happy new year! In our previous post before the holidays, we mentioned we were working toward releasing the next Technical Preview of Power BI reports in SQL Server Reporting Services in January. Today, we’re pleased to make the January 2017 Technical Preview available for download.

Try it on your on-premises server

We released the October 2016 Technical Preview as a pre-configured Virtual Machine in the Azure Marketplace. We received a lot of enthusiastic feedback that this VM with everything you need preloaded – Power BI Desktop, Reporting Services, Analysis Services, and even some sample data and reports – made it really quick and easy to try this exciting new feature.

With the January 2017 Technical Preview, in addition to trying the VM in Azure, you can now download the software and install it on your own on-premises VM or server. In the latter case, you’ll need:

- Your own VM or server (not one in production use)

- Windows Server 2012 or later (or Windows 8 or later)

- .NET Framework 4.5.2 or later

- SQL Server Database Engine (2008 or later), to store the report server database

- SQL Server Analysis Services (2012 SP1 CU4 or later), to store your data models

Need a copy of SQL Server to try? Get SQL Server 2016 Evaluation or try SQL Server vNext CTP1.1.

Download and install in minutes

We’ve learned a lot about how customers install and upgrade Reporting Services and we’re incorporating that feedback into a standalone setup for the January 2017 Technical Preview. This standalone installer offers a lightweight download and a simplified setup process. It’s a work in progress and you’ll notice it evolve in an upcoming preview, but already, you can install the Technical Preview in just a few minutes. Plus, installing the Technical Preview won’t affect any Database Engine instances or other SQL Server features.

In tomorrow’s post, Chris Finlan will guide you through the setup process step-by-step.

Create reports with Power BI Desktop

Along with the new setup, you’ll find a Technical Preview version of Power BI Desktop for download (in 32-bit and 64-bit flavors). With it, you can:

- Connect “live” to Analysis Services models – both Tabular and Multidimensional (cubes)

- Visually explore data and create an interactive report

- Save that report to your report server running the Technical Preview

- View and interact with the report in your web browser

You do need to use this Technical Preview version of Power BI Desktop. If you already have another version of Power BI Desktop installed, you can install this one side-by-side.

More to come

We’re hard at work on enhancing the setup experience and Power BI report functionality for upcoming releases. In the meantime, we hope you enjoy trying the January 2017 Technical Preview and we’re eager to hear your feedback.

Try it now and send us your feedback

- Download the January 2017 Technical Preview

- Try the Virtual Machine in the Azure Marketplace

- Post in the Reporting Services forum (or if you prefer, send us an email)

- Join the conversation on Twitter: @SQLServerBI, #SSRS

Turn on that Scary DC

Stephen Mathews here; now tell me, do you have a domain controller you’re afraid to turn on? Maybe it lost power and nobody noticed for a couple months or you don’t trust your AD restore procedures; perhaps a troubled DC keeps deleting your DNS records – whatever happened, I’m going to show you how to power it on without allowing it to replicate.

The end goal is to disable replication on the troubled DC. This is done very simply by using the repadmin commands:

repadmin /options +DISABLE_INBOUND_REPL

repadmin /options +DISABLE_OUTBOUND_REPL

To disable replication on the troubled machine, you must have either local or remote access. The commands above run locally; to disable replication remotely, use the same commands and add the server name. However, the server must be reachable over the network, which is the same network that replication runs over. You could try hammering the troubled DC with the commands during its startup, and you may block replication before the DC starts replicating, but let’s go with a more proactive method: we’ll block replication on its partners first.

The first step in finding the DC’s replication partners is to check the Connections tab inside NTDS Settings within Active Directory Sites and Services. In the example below, my two “DFS” servers are indeed domain controllers, with SNY-DFS-VM02 being the troubled DC that needs replication blocked.

We have two connection fields: “Replicate From” and “Replicate To”; we also have two ways to block replication: Inbound and Outbound. We’re going to disable Outbound replication on the “Replicate From” servers and disable Inbound replication on the “Replicate To” servers. We can do this remotely using repadmin:

repadmin /options <ReplicateFromServer> +DISABLE_OUTBOUND_REPL

repadmin /options <ReplicateToServer> +DISABLE_INBOUND_REPL

You can see in the screenshot below that the “Current DSA Options” grow from one option, “IS_GC”, to three: “IS_GC DISABLE_INBOUND_REPL DISABLE_OUTBOUND_REPL”.

Now that all the partners have their replication blocked, we can turn on the troubled DC and block its replication the same way. Once you’ve confirmed the troubled DC is blocked, go ahead and reverse your changes for the partners.
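The order of operations above can be sketched as a small planning function: block every partner, block the troubled DC, then unblock only the partners. This is a Python sketch under stated assumptions, not the author's script. It takes the “Replicate From”/“Replicate To” pairs as plain server names. The function name `plan_commands` and the partner name SNY-DFS-VM01 are hypothetical. It only builds the repadmin command strings and does not run them.

```python
from typing import List, Set, Tuple

# (replicate_from, replicate_to) as read from the Connections tab in NTDS Settings
Connection = Tuple[str, str]


def plan_commands(connections: List[Connection], troubled: str) -> List[str]:
    """Build repadmin commands in order: block partners, block the
    troubled DC, then unblock the partners (hypothetical helper)."""
    troubled = troubled.upper()
    block: Set[Tuple[str, str]] = set()
    for source, target in connections:
        if troubled not in (source.upper(), target.upper()):
            continue  # this connection doesn't involve the troubled DC
        # "Replicate From" servers stop sending; "Replicate To" servers stop receiving
        block.add((source.upper(), "DISABLE_OUTBOUND_REPL"))
        block.add((target.upper(), "DISABLE_INBOUND_REPL"))

    partners = sorted(item for item in block if item[0] != troubled)
    own = sorted(item for item in block if item[0] == troubled)

    plan = [f"repadmin /options {srv} +{opt}" for srv, opt in partners]
    plan += [f"repadmin /options {srv} +{opt}" for srv, opt in own]
    # Reverse the partners only; the troubled DC stays blocked
    plan += [f"repadmin /options {srv} -{opt}" for srv, opt in partners]
    return plan


if __name__ == "__main__":
    # Hypothetical two-DC topology: each DC replicates from the other
    conns = [("SNY-DFS-VM01", "SNY-DFS-VM02"), ("SNY-DFS-VM02", "SNY-DFS-VM01")]
    for line in plan_commands(conns, "sny-dfs-vm02"):
        print(line)
```

With two DCs replicating in both directions, the partner ends up with both options set, which matches the “Current DSA Options” change described above.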

We’ll do one final check to verify the troubled DC is not replicating. Voila, it reports that “The destination server is currently rejecting replication requests.”

After walking you through the GUI, I’ll share how I did it with PowerShell. I use Get-ADReplicationConnection to check all the replication connections for any that match the troubled DC in the ReplicateFromDirectoryServer or ReplicateToDirectoryServer properties. Once I have those, I do some string parsing to pull out the server names. Then I write the repadmin command for both the source and target servers and store them in an array. After I have all the commands, I move the troubled DC’s repadmin commands to the end of the array (there was no easy way to do that; I ended up creating three arrays). Finally, I execute the commands; you can see the output in the screenshot below. The script waits for the troubled DC to become reachable, which is when you turn on the troubled DC. Once it blocks replication on the troubled DC, it reverses the changes on its replication partners.

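```powershell
# Requires the ActiveDirectory module (RSAT) and rights to change DSA options
Import-Module ActiveDirectory

$DCtoBlock = "sny-dfs-vm02"
$Commands = @()

# Find every connection where the troubled DC is the source or the target
Get-ADReplicationConnection -Filter * |
    Where-Object {
        ($_.ReplicateFromDirectoryServer -match $DCtoBlock) -or
        ($_.ReplicateToDirectoryServer -match $DCtoBlock)
    } |
    ForEach-Object {
        # Pull the server names out of the distinguished names
        $Source = $_.ReplicateFromDirectoryServer.Split(',')[1].Replace('CN=', '')
        $Target = $_.ReplicateToDirectoryServer.Split(',')[0].Replace('CN=', '')
        # "Replicate From" servers stop sending; "Replicate To" servers stop receiving
        $Commands += "repadmin /options $Source DISABLE_OUTBOUND_REPL"
        $Commands += "repadmin /options $Target DISABLE_INBOUND_REPL"
    }

# De-duplicate, then move the troubled DC's commands to the end
$Commands = $Commands | Select-Object -Unique | Sort-Object
$TailCmds = @($Commands | Where-Object { $_ -match $DCtoBlock })
$Commands = @($Commands | Where-Object { $_ -notmatch $DCtoBlock })
$Commands += $TailCmds

# First pass adds (+) each option, second pass removes (-) it again;
# [ordered] guarantees the '+' pass runs before the '-' pass
foreach ($Action in ([ordered]@{ '+' = $true; '-' = $false }).GetEnumerator()) {
    foreach ($Command in $Commands) {
        $Option = $Command.ToString().Split()[-1]
        $CmdString = $Command.ToString().Replace($Option, "$($Action.Name)$Option")
        # Never re-enable replication on the troubled DC itself
        if (!(($CmdString -match $DCtoBlock) -and ($Action.Name -eq '-'))) {
            # Retry until the server answers and reports the expected option state
            do {
                $CmdString
                Invoke-Expression -Command $CmdString | Out-Null
                $RepAdminOptions = Invoke-Expression -Command $Command.ToString().Replace($Option, '')
            } while ([bool]($RepAdminOptions | Select-String -Pattern $Option -Quiet) -ne $Action.Value)
        }
    }
}
```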

Thanks for reading and let’s keep those DCs on eh?

Three FREE Training Courses on ASP.NET Core from Microsoft Virtual Academy

This time last year we did a Microsoft Virtual Academy class on what was then called "ASP.NET 5." It made sense to call it 5 since 5 > 4.6, right? But since then, ASP.NET 5 has become .NET Core 1.0 and ASP.NET Core 1.0. It's 1.0 because it's smaller, newer, and different. While the "full" .NET Framework marches on, on Windows, .NET Core is cross-platform and built for the cloud.

Command line concepts like dnx, dnu, and dnvm have been unified into a single "dotnet" driver. You can download .NET Core at http://dot.net and, along with http://code.visualstudio.com, you can get a website up and running in 10 minutes on Windows, Mac, or many flavors of Linux.

So, we've decided to update and refresh our Microsoft Virtual Academy courses. In fact, we've done three days of training: Introduction, Intermediate, and Cross-Platform, and all three are now available! We just released training for ASP.NET Core 1.0 Cross-Platform that shows Mac, Ubuntu, and Docker!

Head over to Microsoft Virtual Academy and watch our new, free "Introduction to ASP.NET Core 1.0." It moves at a great, relaxed pace if you've been out of the game for a bit, or if you're a seasoned .NET "Full" developer who has avoided learning .NET Core thus far. If you don't know the C# language yet, check out our online C# tutorial first, then watch the video.

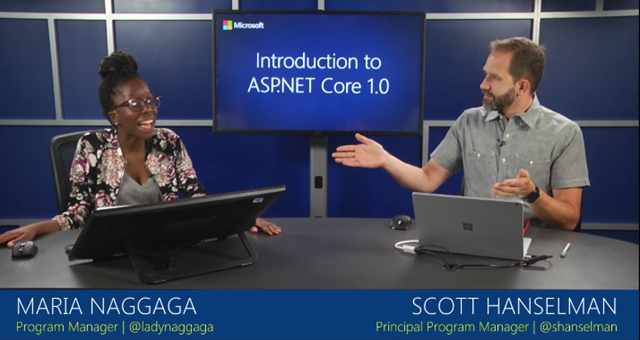

Introduction to ASP.NET Core 1.0

Join experts Scott Hanselman and Maria Naggaga, and find out how to build .NET Core applications on any operating system. Bring your web development expertise and roll up your sleeves, for this first in a three-part series.

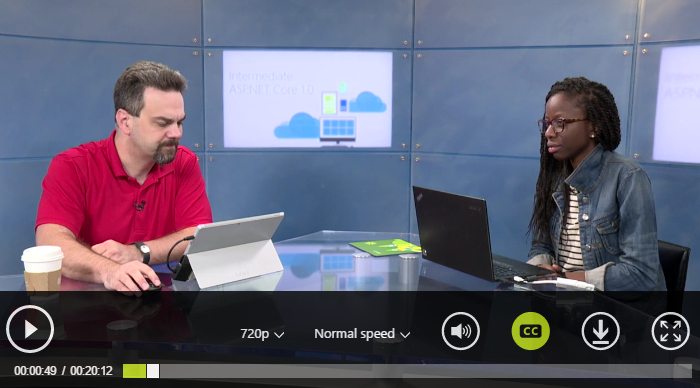

Intermediate ASP.NET Core 1.0

Want a deeper dive into ASP.NET Core 1.0? Build on what you learned in Introduction to ASP.NET Core 1.0, and explore this new technology even further, as Scott Hanselman, Jeff Fritz, and Rowan Miller offer an in-depth, intermediate-level look at ASP.NET Core 1.0.

ASP.NET Core 1.0 Cross-Platform

Ready to build and deploy ASP.NET Core 1.0 apps? Join experts Scott Hanselman, Maria Naggaga, and Glenn Condron, and see how to do just that using Mac and Linux. Revisit content from the Introduction to ASP.NET Core 1.0 course, but using a Mac and Linux.

Do us a favor: when you watch these, rate them (5 stars!) and SHARE them on your social networks.

NOTE: There's a LOT of quality free courseware for learning .NET Core and ASP.NET Core. We've put the best at http://asp.net/free-courses and I encourage you to check them out!

Hope you have as much fun with these courses as we had creating them!

Sponsor: Do you deploy the same application multiple times for each of your end customers? The team at Octopus have taken the pain out of multi-tenant deployments. Check out their latest 3.4 release

© 2016 Scott Hanselman. All rights reserved.

Announcing “UWPDesktop” NuGet Package Version 14393

The UWPDesktop NuGet package is here to improve the developer experience with Visual Studio when walking along the Desktop Bridge.

The Desktop Bridge allows you to call UWP APIs directly from your WinForms, WPF or VB application. You can use APIs such as Live tiles, notifications, App Services and many more!

Previously, calling UWP APIs from a converted app was a confusing process. You had to find and reference the right .dll or .winmd, and it often wasn’t obvious which one to choose.

For example, to use “await” on UWP types, you had to reference the System.Runtime.WindowsRuntime.dll (c:\Program Files (x86)\Reference Assemblies\Microsoft\Framework\.NETCore\v4.5\System.Runtime.WindowsRuntime.dll), which was actually from Windows 8.1 and therefore only worked in conjunction with “facade\windows.winmd” (c:\Program Files (x86)\Windows Kits\10\UnionMetadata\Facade\Windows.WinMD).

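```csharp
using Windows.ApplicationModel.AppService;

// Awaiting a WinRT async operation such as OpenAsync() from desktop code
// is what required the extra System.Runtime.WindowsRuntime.dll reference.
// (Set AppServiceName and PackageFamilyName before opening; values omitted.)
var connection = new AppServiceConnection();
AppServiceConnectionStatus status = await connection.OpenAsync();
```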

Confusing, right? The new way is much simpler: just add the latest UWPDesktop NuGet package to your project, and you’re ready to call any supported UWP API without needing additional references.

You have two options for installing the package:

- In Visual Studio, right-click on your project, select “Manage NuGet Packages,” and search for and install the UWPDesktop package.

- Use the Package Manager Console

Install-Package UwpDesktop

Warnings for unsupported UWP APIs

Not all modern APIs can be called directly from your desktop application; for example, you still can’t use XAML or SecondaryTile in your WinForms app. The UWPDesktop NuGet package makes your life easier by raising a warning if you try to call an unsupported API.

To learn more about the UWPDesktop NuGet package and the Desktop Bridge, check out the resources below.

Resources

The post Announcing “UWPDesktop” NuGet Package Version 14393 appeared first on Building Apps for Windows.

Announcing the availability of Clear Linux* OS in Azure Marketplace

Today, we’re excited to announce the availability of Clear Linux* OS for Intel® Architecture in Azure Marketplace. Clear Linux OS is a free, open source Linux distribution built from the ground up for cloud and data center environments and tuned to maximize the performance and value of Intel architecture.

Microsoft Azure is the first public cloud provider to offer Clear Linux, and we’re really excited about what it means for Linux users in the cloud and the community at large. Here’s a little bit more about the Clear Linux offerings in Azure Marketplace that we are announcing today:

- A bare-bones VM, intended to serve as a starting point for those wanting to explore and build out a system with bundles of their choosing

- A container image that includes the popular Docker container runtime, and,

- A sample solution image for developing machine learning applications, pre-loaded with popular open source tools

In addition to the performance features of Clear Linux, we believe that DevOps teams will benefit from the stateless capabilities of Clear Linux in Azure. By separating the system defaults and distribution best practices from the user configuration, Clear Linux simplifies maintenance and deployment, which becomes very important as infrastructure scales. This also pairs well with bundles, a powerful way of distributing software that allows for scenarios such as a system update with a new kernel and reboot in just a few seconds.
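A layered lookup can illustrate the idea of keeping distribution defaults separate from user configuration. This Python sketch is a conceptual model only, not Clear Linux’s implementation, and the keys and values are hypothetical. `ChainMap` checks the user’s overrides first and falls back to the defaults. Swapping in a new defaults layer during an update therefore leaves the user’s changes intact.

```python
from collections import ChainMap
from typing import Dict


def effective_config(user: Dict[str, str], defaults: Dict[str, str]) -> ChainMap:
    # Lookups check the user's overrides first, then fall back to distro defaults
    return ChainMap(user, defaults)


# Hypothetical keys and values, for illustration only
user = {"log.level": "debug"}  # the only setting the admin changed
defaults_v1 = {"log.level": "info", "ntp.server": "ntp.example.org"}

cfg = effective_config(user, defaults_v1)
print(cfg["log.level"], cfg["ntp.server"])  # debug ntp.example.org

# An OS update replaces the defaults layer wholesale; the user layer is untouched
defaults_v2 = {"log.level": "warning", "ntp.server": "time.example.net"}
cfg = effective_config(user, defaults_v2)
print(cfg["log.level"], cfg["ntp.server"])  # debug time.example.net
```

The user layer holds only what the administrator actually changed. An update never has to merge or rewrite those files.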

The availability of Clear Linux in Azure Marketplace adds more open source options to the portfolio of teams and organizations that are looking to accelerate business value and increase agility in the cloud, and it’s a testament to our continued focus on great experiences for Linux users in the hybrid cloud.

It also highlights the openness and flexibility of Azure, enabling teams to choose from a wide array of Linux and open source solutions from Marketplace, partner offerings, or their own portfolio, and to add value with a rich and powerful set of cloud services. It is also an important milestone in the broad collaboration between Microsoft and Intel: we worked together throughout 2016 to bring the Azure Linux Agent to Clear Linux, and collaborated on kernel and system improvements focused on boot times and performance.

“Our team is delighted to have worked with Intel since day one on this project, and to bring Clear Linux to Azure Marketplace and our customers as a result of that collaboration”, said KY Srinivasan from the Enterprise Open Source Team at Microsoft.

Get started with Clear Linux in Azure today by deploying any of the images in Azure Marketplace, and checking out the Documentation on the Clear Linux site. Don’t have an Azure subscription yet? Get started with a free 30-day trial!

Collaboration and federation: Azure Service Bus Messaging on-premises futures

Azure Service Bus Messaging is one of the most powerful message brokers, with the deepest feature set available anywhere in public cloud infrastructure today. The global Azure Service Bus broker infrastructure, available in all global Azure regions and the Azure Government cloud, processes nearly 500 billion message transactions per month. Each cluster in these regions is backed by as many as hundreds of compute cores, terabytes of memory, and petabytes of backing storage capacity, far exceeding the cluster deployment scale of any commercial or open source broker you could acquire and run.

As a fully transactional broker that builds on the ISO/IEC standard AMQP 1.0 protocol, Service Bus provides a robust foundation for commercial and financial workloads. It provides strong assurances on message delivery and message retention, with SLA-backed and sustainably achieved availability and reliability rates that are unmatched in the industry at its functional depth and scale. The Azure Premium Messaging tier provides predictable performance and further enhanced reliability by exclusively reserving processing resources on a per-customer basis, inside an environment that provides all the management and cost advantages of cloud scale.

We’re confident in stating that Azure Service Bus, delivered from the nearest Azure datacenter over redundant network connectivity, is a choice far superior in terms of cost and reliability to most on-premises messaging cluster installations, even if the core workloads run and remain in an on-premises environment.

Hybrid is the future

The future of hybrid cloud computing in Azure is twofold. First, we provide world-class services and capabilities with open protocols that can be composed with and leveraged by on-premises services run anywhere. Second, we license the software backing these services for on-premises delivery on top of Azure Stack.

This strategy is also guiding the future for Azure Service Bus Messaging and all other capabilities delivered by the Messaging team, which includes Azure Event Hubs and Azure Relay.

As a consequence, we are announcing today that we will not provide an immediate successor to the standalone Service Bus for Windows Server 1.1 product. Service Bus for Windows Server 1.1 shipped as a free download that could be installed inside and outside of Azure Pack, the precursor to Azure Stack. The product remains available as a free download and will go out of mainstream support on January 9, 2018.

While we are continuing to significantly strengthen our commitment to deliver Service Bus, as well as our other messaging technologies, on top of the packaged on-premises Azure technology stack, we will no longer deliver a Windows Server or Windows client installable message broker outside of that context.

We have come to this conclusion and decision after a careful analysis of market and community needs, trends, and considering what our true technology strengths are.

After decades of monoculture, there has been a “Cambrian explosion” in messaging platforms. There are many kinds of brokers and messaging libraries that fill many niches. We believe that the breadth of choice customers now have for running messaging middleware on singular special purpose devices, in communication gateways and the fog, on factory floors, in retail stores, in bank branches, inside building control systems, or inside a delivery truck or a container vessel is very, very exciting.

Microsoft Azure’s strengths lie in building and running robust cloud-scale systems that deal with high-volume, high-velocity, consolidated message flows in and through the cloud, via Azure Service Bus Messaging, Azure Relay, and Azure Event Hubs. We believe that “hybrid” also means collaboration and integration to create a “better together” story of a healthy messaging platform ecosystem that fills all the niches across IT and IoT, and that leverages public cloud as a backplane and integration surface.

Microsoft therefore continues to invest in advancing the AMQP and MQTT protocols in OASIS and working with organizations, such as the OPC Foundation, in vertical industries to establish a solid set of choices for messaging protocol standards. Based on that standards foundation, we are looking forward to collaborating with many vendors and communities to build specialized messaging infrastructure, creating federation bridges and integration into and through Azure and Azure Stack. The timeline for availability of our services on Azure Stack will be announced at a future date.

Seven Steps for Using Data and Power BI to be Productive in Telling a Data Story

Failure Anomalies alert now detects dependency failures

We’ve upgraded Smart Detection – Failure Anomalies so that it monitors your web app’s outgoing dependencies and AJAX calls as well as incoming server requests. If you’re monitoring your app with Application Insights, you’ll be notified within minutes if there’s a sudden disruption or degradation in your app’s performance.

Provided your app has a certain volume of traffic, Smart Detection – Failure Anomalies configures itself. It learns your app’s usual background level of failures. It triggers an alert if the rate of failures goes above the learned pattern. The diagnostic information in the alert can help you fix the problem before most users are aware of it.
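The post does not describe the detection algorithm itself. Here is a rough Python illustration of “learn a baseline, alert when the failure rate rises above it,” using a simple mean-plus-k-standard-deviations rule. The function name, the threshold `k`, and `min_history` are all hypothetical stand-ins, not Application Insights internals.

```python
from statistics import mean, pstdev
from typing import List


def is_failure_anomaly(history: List[float], current: float,
                       k: float = 3.0, min_history: int = 10) -> bool:
    """Return True if `current` failure rate rises above the learned baseline.

    history: past failure rates (fraction of failed calls per interval).
    k and min_history are hypothetical tuning knobs for this sketch.
    """
    if len(history) < min_history:
        return False  # not enough traffic yet to learn a background level
    baseline = mean(history)   # usual background level of failures
    spread = pstdev(history)   # how much that level normally varies
    return current > baseline + k * spread
```

A real detector would also need a minimum absolute rate so that one failed call after a perfectly clean history doesn't fire an alert. The same check could run separately over request failures and dependency-call failures.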

Until recently, Smart Detection monitored only failed incoming requests (although you can manually set alerts on a wide variety of metrics). Now it also monitors the failure rate of dependency calls, that is, calls that your app makes to external services such as REST APIs or SQL databases. This includes both server-side calls and AJAX calls from the client side of your app.

Here’s a sample of an alert you might get:

This upgrade improves the chances of your finding a fault quickly, especially if it’s caused by one of the services you depend on. You’ll get the alert even if your app returns a non-failure response to your users.

By default, you get a shorter alert mail than this example, but you can switch to this detailed format by selecting “Get more diagnostics…” in Failure Anomalies rule settings:

Can blockchain secure supply chains, improve operations and solve humanitarian issues?

In my last post, I posed the question: What does identity mean in today’s physical and digital world? I was coming off the ID2020 Summit at the UN where we announced our work on blockchain self-sovereign identity. What struck me most was the magnitude of the problems identified, and I haven’t been able to stop thinking about solving these already-at-scale problems ever since. One of the things that Microsoft thinks about when it looks at products is solving problems at scale. It is, and has always been, a mass-market product juggernaut. Yesterday we strove for a vision of “a PC on Every Desk,” and today we look to Azure, our hyperscale cloud, to solve the world’s productivity problems at scale through massive compute, memory and storage workloads operating at a nice little hum in datacenters that span the globe. But I digress.

The important question is: How do we take this DNA and think about societal problems that already exist at scale? We have proven that most technology in one form or another can perform when architected for scale. But where and how do we start and then how do we penetrate with swarms of adoption to make meaningful impact on society’s greatest problems?

I started to think about where the problem space crosses the corporate and enterprise landscape. What if we can link corporate objectives directly to the problem of child labor? What if we can find businesses that might benefit from the exploitation witnessed and eliminate them? Alas, these approaches are too direct and won’t scale as first steps on this journey.

Another approach would be to look at ways we can back into solving the problems. So I began to think more indirectly about the attack surfaces that corporations operate on where there might be child labor, trafficking or other infractions. If we could identify large attack surfaces in a specific industry that might be a good starting point. This led me squarely to an industry I know very well that has been struggling to evolve ever since Amazon entered their playground: Retailers, Brands, and Ecommerce sites. The landscape gives us an unprecedented opportunity to maximize coverage via a corporate attack surface trifecta:

1. Retailers: think Macy’s, Nordstrom, Best Buy

2. Brands: think Perry Ellis, Nike, Under Armour

3. Ecommerce: Amazon, Tmall, and all of the retail.com variants like Macys.com

The one thing they all have in common is Supply Chain.

Their supply chain ends in the developed world with retail stores to shippers, truck drivers, dock and port workers and their employers. It extends back to overseas warehouses, distribution centers and all of their laborers, whether contracted, full time or part time or temporary workforce. The origin of the supply chain extends all the way back to the factory and local shippers handling the goods.

Take the case of a company like Perry Ellis that is tagging each item of clothing at the manufacturing factory, called source tagging. When a worker hangs a tag on a piece of clothing or sews an RFID tag into a pair of jeans the life of the tracked good begins. Retailers and brands have been evaluating and deploying RFID and other sensor and tracking technology in the Supply Chain and Retail stores for many years. In 2004 I built an RFID Practice at IBM Global Services. That year Walmart demanded all of its suppliers tag their products with RFID.

So, as with other blockchain projects, this raises the question: why now? What is so special about this technology that lets us simultaneously add value to Macys.com and Perry Ellis while starting to chip away at one part of the un-identified, trafficked or exploited world population?

I believe the answer lies in a very subtle tweak to the existing tracking systems that are being deployed across the entire retail and ecommerce landscape. What if we could use something like RFID chips and scanners to securely and provably identify every touchpoint of every piece of product all along the supply chain? Why does that add value if we are sort of doing that already?

A little history. The reason tracking tags like RFID are being deployed by brands and retailers is to be able to effectively compete with Amazon.com. What is the single biggest competitive threat a retailer has against Amazon? The answer is a seemingly simple feature on a website:

Underneath what looks like a simple ecommerce site feature lies a very big problem: Inventory Transparency. Retailers cannot effectively invoke this competitive advantage without Inventory Transparency. Retailers cannot achieve Inventory Transparency without RFID or other tracking tags.

RFID systems today simply allow you to track inventory at each waypoint in the supply chain and all the way through the retail store stockroom, floor and checkout. On any given day, you can tell in which part of your supply chain or store replenishment process your product is located. Part of the challenge is that many systems deployed today don’t do a good job providing visibility tracking all the way through the supply chain. This is partly because of siloed tracking systems and databases that live in a multitude of legal entities.

So why is it that even with all of this tracking, there is still a high percentage of lost product due to fraud?

How can a simple identity tweak improve this situation for retailers and brands while simultaneously chipping away at child exploitation?

Enter the trust protocol we call blockchain, and specifically blockchain self-sovereign identity. The state change is to plug the holes in your supply chain by identifying and provably tracking every scan at a waypoint by a device or human being operating in your supply chain. This spans everyone from the factory worker who sews the RFID tag into the jeans, to the Distribution Center RFID door reader, to the employees or contractors at the DC. It extends to the entities involved: the factory owner, the contractor that employs the DC contractors, and so on. By doing this, you create a closed system of verifiably known actors across the supply chain. Blockchain also lets us create a single shared ledger of truth across these independently owned waypoints, driving toward full supply chain inventory transparency. We are using this concept in a number of projects to reduce fraud in other industries. This use case meets my sniff-test criteria for blockchain value in a project.
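To make “a single shared ledger of truth” a little more concrete, here is a toy Python sketch of a hash-chained custody log. A hash chain provides the tamper-evidence property of a blockchain ledger. The sketch is an illustration under loud assumptions, not Project Manifest’s design. It has no distributed consensus and no digital signatures, and `actor_id` is a plain string standing in for a self-sovereign identity. `CustodyLedger` and its methods are hypothetical names.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class CustodyLedger:
    """Toy append-only log: each scan records who touched which item where,
    and links to the hash of the previous entry."""
    entries: List[dict] = field(default_factory=list)

    def record_scan(self, item_id: str, waypoint: str, actor_id: str, timestamp: int) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"item": item_id, "waypoint": waypoint, "actor": actor_id,
                "ts": timestamp, "prev": prev_hash}
        digest = _digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every link; editing any past entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

In a real deployment, each independently owned waypoint would hold a copy of the ledger and agree on new entries. That agreement is what this single-process sketch leaves out.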

Blockchain can provide value if:

1. There is fraud or lack of trust in the system

2. There are multiple parties to a transaction

This identity tweak takes a corporate system used for tracking goods and transforms it into a system that can reduce fraud, operating costs, and product loss while simultaneously reducing reliance on exploited people, not to mention new opportunities for supply chain financing and insurance.

Should a box of Perry Ellis jeans fall out of the supply chain somewhere, it will immediately be known when it does not reach the next waypoint in the expected time. The forensic investigation will start with the prior transaction that recorded the custody of that item at the prior touch point. A blockchain identity creates the provable record of who last touched the product. This factor holds workers and contracting companies accountable. Reputation gets asserted or attested for that worker and that reputation is rolled up to the Contracting company to create a reputation score for the vendor.
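The forensic flow described above can be sketched in Python. It notices that an item missed its next waypoint, looks up who last had custody, and rolls worker reputation up to the contracting company. The record shape, the fixed transit window, and the simple averaging used for vendor reputation are hypothetical choices for illustration. The post does not specify how reputation is scored.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (item_id, waypoint, actor_id, timestamp_in_hours): hypothetical record shape
Scan = Tuple[str, str, str, int]


def overdue_items(scans: List[Scan], route: List[str],
                  max_transit: int, now: int) -> Dict[str, Scan]:
    """Map each item that hasn't reached its next waypoint in time to its last scan."""
    last: Dict[str, Scan] = {}
    for scan in scans:
        item = scan[0]
        if item not in last or scan[3] > last[item][3]:
            last[item] = scan  # keep the most recent scan per item
    overdue: Dict[str, Scan] = {}
    for item, scan in last.items():
        at_final_stop = route.index(scan[1]) == len(route) - 1
        if not at_final_stop and now - scan[3] > max_transit:
            overdue[item] = scan  # scan[2] is who last had custody
    return overdue


def vendor_reputation(worker_scores: Dict[str, float],
                      employer_of: Dict[str, str]) -> Dict[str, float]:
    """Roll worker reputation up to each contracting company (simple average)."""
    scores: Dict[str, List[float]] = defaultdict(list)
    for worker, score in worker_scores.items():
        scores[employer_of[worker]].append(score)
    return {vendor: sum(s) / len(s) for vendor, s in scores.items()}
```

For example, with a route of factory, DC, and store and a 24-hour window, a box last scanned at the DC 30 hours ago is flagged. The flag carries the DC worker’s ID, which is where the investigation starts.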

The result is that Supply Chain Contractors will stop hiring unverified or reputationally attested high risk workers. This system of compliance and rating will force Supply Chain workers to be held accountable for their actions therefore reducing fraud (the corporate benefit) while simultaneously requiring a worker to register a self-sovereign identity that travels with them from job to job. This small tweak to existing RFID deployments paid for by corporations will drive undocumented workers from the system over time thus beginning the process of chipping away at the 1.5B undocumented and exploited people identified by the UN’s Sustainable Development Goal 16.9.

Is this the beginning of an opportunity to offer a “Verifiably Clean Supply Chain Certification” for brands, retailers and e-commerce sites? It is certainly achievable technically. My thesis is that even if social responsibility isn't a big enough reason, there are enough financial reasons to move forward. To this end, we have launched Project Manifest and made recent announcements with partners like www.Mojix.com and ID2020. More details in my next post.

Azure SQL Data Warehouse: Secondary indexes on columnstore now available

In Azure SQL Data Warehouse, you can now create secondary B-tree indexes on columnstore tables.

Most analytic queries aggregate large amounts of data and are served well by scanning the columnstore segments directly. However, there is often a need to find a "needle in a haystack": a query that looks up a single row or a small range of rows. When the filter column has a B-tree index, such lookups can see response times improve by orders of magnitude (even 1,000 times) and may run in under a second.

To create a secondary index on a columnstore table, use the same syntax as the standard CREATE INDEX Transact-SQL statement.
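Why a B-tree helps with needle-in-a-haystack queries can be shown with a small Python model. This is not how Azure SQL Data Warehouse stores data: a sorted list searched with `bisect` stands in for the B-tree, and the names `SortedIndex` and `full_scan` are hypothetical. The point is that the index finds a narrow key range with a binary search, logarithmic in the row count, while a scan must touch every row.

```python
import bisect


class SortedIndex:
    """Sorted (key, row_id) pairs searched with binary search; a stand-in for a B-tree."""

    def __init__(self, column: list) -> None:
        pairs = sorted((value, row_id) for row_id, value in enumerate(column))
        self.keys = [key for key, _ in pairs]
        self.row_ids = [row_id for _, row_id in pairs]

    def lookup_range(self, lo, hi) -> list:
        """Row ids whose key lies in [lo, hi], found in O(log n) plus the result size."""
        start = bisect.bisect_left(self.keys, lo)
        end = bisect.bisect_right(self.keys, hi)
        return self.row_ids[start:end]


def full_scan(column: list, lo, hi) -> list:
    """What a scan does without an index: test every row."""
    return [row_id for row_id, value in enumerate(column) if lo <= value <= hi]
```

Both functions return the same rows; the difference is how much data is touched to find them, which is what the secondary index on the filter column saves.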

Updates for OneNote Class Notebook, Learning Tools and Office Lens—plus stickers!

The OneNote team continues to listen to teachers to learn about how we can help them be more productive with our software. Today, we are announcing a set of improvements across OneNote Class Notebook, Learning Tools and Office Lens. All these improvements have come directly from our regular discussions with teachers and include:

- The availability of Class Notebook Tools on more platforms.

- Updates for Learning Tools for OneNote—new languages and more.

- The ability for teachers to give stickers to students in their Class Notebooks.

- New embeddable content types from partners.

- Updates for the OneNote Class Notebook and LMS.

- The availability of Office Lens for iPad.

Class Notebook Tools—now available for OneNote Online and the Windows 10 app

We’re excited to announce that OneNote Class Notebook Tools are now available for OneNote Online and OneNote for Windows 10 to help teachers work even more efficiently across more platforms!

Class Notebook Tools for OneNote Online.

Class Notebook Toolbar for OneNote for Windows 10.

With the Class Notebook Tools for OneNote Online and OneNote for Windows 10, you can do the following:

Distribute a page or section to your students—Rather than sending students to the Content Library to copy a page for themselves, teachers can distribute a page to all students in a class with just two clicks.

Review student work sequentially—Just like in the Class Notebook add-in for OneNote 2013 or 2016, teachers are provided with a list of all the pages they’ve distributed and a quick way to sequentially review work (homework, assignments, quizzes, etc.). Simply click a student’s name to navigate to the page in the student’s notebook.

Class Notebook Tools for OneNote Online are rolling out today to Office 365 Education customers. The OneNote for Windows 10 update will be available in the Windows Store in the coming days; once you download the OneNote Windows 10 app, you can enable the feature through the settings in the Options menu.

For more details, visit the Getting Started guides for Class Notebook Tools for OneNote Online or Class Notebook Tools for OneNote for Windows 10.

Learning Tools updates—new languages and more

We have been hard at work rolling out Learning Tools across new apps and platforms, including Word desktop, Word Online, OneNote Online and Office Lens for iPhone. At the same time, we've been listening to teachers and students and making improvements to the original Learning Tools add-in for OneNote. Our international audiences asked for Learning Tools in more languages, as well as the ability to use dictation in more languages, and we are pleased to launch two improvements in this area:

- The Learning Tools user interface is now localized in six new languages: Hindi, Japanese, Korean, Thai, Chinese (Simplified) and Chinese (Traditional).

- Support for three new dictation languages: Russian, Portuguese (Brazil) and Chinese.

We also heard that users want explicit punctuation when dictating. For example, saying “period” now translates to “.” and saying “question mark” translates to “?”.
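The spoken-punctuation rule can be illustrated with a short Python sketch. It is a hypothetical model, not how Learning Tools implements dictation: it post-processes a transcript, replacing command words with symbols. Only “period” and “question mark” come from the post; the “comma” entry and the function name are assumptions.

```python
import re

# "period" and "question mark" are from the announcement; "comma" is an assumed extra entry.
SPOKEN_PUNCTUATION = {
    "question mark": "?",
    "period": ".",
    "comma": ",",
}


def apply_spoken_punctuation(transcript: str) -> str:
    """Replace spoken punctuation commands (and the space before them) with symbols."""
    # Longest phrases first, so multi-word commands win over shorter ones.
    phrases = sorted(SPOKEN_PUNCTUATION, key=len, reverse=True)
    # \b on both sides keeps words such as "periodic" from matching.
    pattern = re.compile(r"\s*\b(" + "|".join(map(re.escape, phrases)) + r")\b",
                         re.IGNORECASE)
    return pattern.sub(lambda m: SPOKEN_PUNCTUATION[m.group(1).lower()], transcript)
```

For instance, `apply_spoken_punctuation("is it raining question mark yes period")` yields `"is it raining? yes."`.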

In addition to these improvements, we made a few other enhancements:

- Now, when IT admins deploy Learning Tools, the Update button is only displayed on client machines that have rights to install updates.

- Implemented several bug fixes and performance improvements.

For feedback, you can always drop us a line at learningtools@onenote.uservoice.com.

Fun with stickers

A top request from teachers was to add some “fun” when giving feedback to students in OneNote. Now teachers can give students stickers in OneNote and easily add a personalized message to each sticker. In addition, we plan to release new sticker packs throughout 2017, so keep an eye out for more “fun” ways to give students feedback.

Stickers are available today in the OneNote Online app and will be available in the coming weeks for the OneNote Class Notebook add-in for desktop (2013 or 2016) and Windows 10 app.

New embeddable content from third-party partners

Last year, we announced that OneNote supports embedding interactive content such as YouTube, Vimeo, Slideshare, Sway, Docs.com and Office Mix. Today, we are announcing support for a new set of partners to embed content onto the OneNote canvas including:

To view all of our supported embed content, visit this public OneNote notebook, created by @OneNoteC.

Quizlet support in OneNote.

Geogebra support in OneNote Online.

OneNote Class Notebook and LMS updates

We recently announced these updates to OneNote Class Notebook:

- Read/Unread support for page distributions and assignments in the Class Notebook add-in for OneNote.

- New LMSs supported in the Class Notebook add-in for OneNote.

In addition, we are about to launch new assignment and grade support for the Skooler LMS in the next update of the Class Notebook add-in for OneNote desktop. You can learn more at our Education Partner page. We plan to continually improve these tools and deliver on top requests in 2017, just as we did in 2016. Check out the 2016 recap for OneNote.

Please don’t hesitate to email us directly at classnotebook@onenote.uservoice.com, where the OneNote Class Notebook product team reads through every piece of feedback received. We’re excited for teachers to try out these new improvements and look forward to hearing feedback!

Office Lens for iPad and the Immersive Reader

We are also announcing the availability of Office Lens for iPad. Shipping together with an improved version of Office Lens for iPhone, both apps have Learning Tools built right in. Scan documents and whiteboards with your iPad or iPhone camera. Launching the Immersive Reader lets you hear the text from the printed page read back to you. The words are highlighted as they’re read to make them accessible for all students.

With the bigger screen on the iPad, going from physical to accessible digital content is easier and more compelling than ever. And, as always, you can easily save the content to OneNote and OneDrive. You can get Office Lens today in the App Store.

Download Office Lens for free on your Windows, iOS or Android device today!

The post Updates for OneNote Class Notebook, Learning Tools and Office Lens—plus stickers! appeared first on Office Blogs.

Forrester’s 2016 Enterprise Collaboration Wave report is out and Microsoft is a Leader!

Forrester, one of the most influential research and advisory firms in the world, recently released its latest report evaluating enterprise collaboration providers, and we're pleased to share that Microsoft is named a Leader in the report.

We believe different groups have different needs when it comes to collaboration, and that’s why Office 365 is designed to give teams a choice in collaboration tools. The report examined collaboration with an emphasis on enterprise-wide knowledge sharing, and we’re honored to see Microsoft and Yammer—as part of the Office 365 suite—in the Leaders designation in the report.

Yammer is the social network for work and is included in Office 365. Over the past few years, we’ve worked to integrate Yammer more deeply into Office 365, both to extend the Yammer experience to other applications in Office 365, and to bring some of those other experiences into Yammer.

Just last fall, we announced Yammer will soon integrate with Office 365 Groups, which means every new Yammer group will get a corresponding team site for file sharing, a OneNote for shared notetaking and a team project plan with Planner. With these enhancements and many others to follow in the months ahead, we’re making Yammer better and better at connecting people and teams across your organization.

You can read and download the full Forrester Wave™: Enterprise Collaboration, Q4 2016 report.

The post Forrester’s 2016 Enterprise Collaboration Wave report is out and Microsoft is a Leader! appeared first on Office Blogs.

The week in .NET – On .NET with David Pine, PwdLess, Terraria

To read last week’s post, see The week in .NET – On .NET with Reed Copsey, Jr., Orchard Harvest, Ammy, Concurrency Visualizer, Eco.

On .NET

Last week, David Pine was on the show to talk about his magic mirror, a screen in a mirror, that can display useful information such as his schedule for the day, the weather forecast, and much more. The mirror uses a Raspberry Pi 3 running Windows 10 IoT Core, and runs a custom, open-source UWP application. It also has a camera, microphone, and sound bar, enabling voice-based interactions.

This week, we’ll talk about the year ahead for .NET. The list of guests is still TBD as I’m writing this, but I hope to have some good surprises. We’ll take questions on Gitter, on the dotnet/home channel and on Twitter. Please use the #onnet tag. It’s OK to start sending us questions in advance if you can’t do it live during the show.

Package of the week: PwdLess

Passwords suffer from many issues, and each mass breach, some of which have leaked hundreds of millions and up to a billion passwords, casts more doubt on their efficacy. There are alternatives to passwords, however, that may be appropriate for your applications.

One such alternative is “magic links”: nonces that the application sends, usually to an email address or phone number known to belong to the user.
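The server side of a magic-link scheme can be sketched in Python. This is a generic illustration, not PwdLess's code: the class name, the 15-minute lifetime, and the in-memory storage are assumptions. Each nonce is random, bound to one identifier, single-use, and short-lived.

```python
import secrets
import time


class MagicLinkStore:
    """Issues single-use, expiring nonces bound to an identifier (email or phone)."""

    def __init__(self, ttl_seconds: float = 900, clock=time.time) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._pending = {}  # nonce -> (identifier, expires_at)

    def issue(self, identifier: str) -> str:
        # token_urlsafe draws from the OS CSPRNG, so nonces are unguessable.
        nonce = secrets.token_urlsafe(32)
        self._pending[nonce] = (identifier, self.clock() + self.ttl)
        return nonce  # the app would email or text a link containing this value

    def redeem(self, nonce: str):
        """Return the identifier if the nonce is valid, else None."""
        entry = self._pending.pop(nonce, None)  # pop makes every nonce single-use
        if entry is None:
            return None
        identifier, expires_at = entry
        if self.clock() > expires_at:
            return None
        return identifier
```

A successful `redeem` is the point where a service would issue a session token, such as the JWT that PwdLess returns.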

Even though PwdLess is built with .NET, it’s usable from any platform through its simple HTTP API. GET /auth/sendNonce?identifier=[IDENTIFIER] sends the nonce, and GET /auth/nonceToToken?nonce=[NONCE] responds 200 with the JWT if the nonce is valid.
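Because the API is plain HTTP, a client needs nothing beyond the standard library. A minimal Python sketch against the two endpoints above, assuming a PwdLess server at a hypothetical `BASE_URL` and assuming the JWT comes back as the raw response body (the post only says the endpoint responds 200 with the JWT):

```python
from urllib.error import HTTPError
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://localhost:5000"  # hypothetical: wherever your PwdLess server runs


def send_nonce_url(base_url: str, identifier: str) -> str:
    # urlencode percent-escapes characters such as "@" in email addresses.
    return f"{base_url}/auth/sendNonce?{urlencode({'identifier': identifier})}"


def nonce_to_token_url(base_url: str, nonce: str) -> str:
    return f"{base_url}/auth/nonceToToken?{urlencode({'nonce': nonce})}"


def send_nonce(identifier: str, base_url: str = BASE_URL) -> bool:
    """Ask the server to deliver a nonce to the user's email or phone."""
    with urlopen(send_nonce_url(base_url, identifier)) as resp:
        return resp.status == 200


def exchange_nonce(nonce: str, base_url: str = BASE_URL):
    """Return the JWT if the nonce is valid, else None (assumes the JWT is the body)."""
    try:
        with urlopen(nonce_to_token_url(base_url, nonce)) as resp:
            if resp.status == 200:
                return resp.read().decode("utf-8").strip()
    except HTTPError:
        return None  # urlopen raises for 4xx/5xx, e.g. an invalid or expired nonce
    return None
```

Keeping URL construction separate from the network calls makes the request shapes easy to check without a running server.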

PwdLess configuration is done through a simple JSON file:

Game of the week: Terraria

Terraria is an incredibly popular 2D adventure-survival game that blends classic action game mechanics with sandbox-style freedom. In Terraria, players dig, fight and explore the world, gathering materials that can be used to craft gear, machinery, and dwellings. You can seek out foes that grow in difficulty as you build up your very own city, giving allies that you encounter along your travels a place to stay. Terraria features randomly generated open worlds, a vast array of weapons and armor, and numerous crafting options.

Terraria was created by Re-Logic using C# and XNA. It is available on Steam for Windows and Mac, Xbox 360, Xbox One, PlayStation 3, PlayStation 4, PSVita, Android and iOS.

User group meeting of the week: migrating from TFS to the cloud in Sydney

On Wednesday, January 18 at 6:30PM, the Sydney .NET User Group will have a session with Danijel Malik on migrating from TFS to the VSTS portal.

.NET

- What .NET Developers ought to know to start in 2017 by Scott Hanselman.

- Essential .NET – Essential MSBuild: a build engine overview for .NET tooling by Mark Michaelis.

- Engineering changes for corefx by Wes Haggard.

- Smarter build scripts with MSBuild and .NET Core by Matthew Abbott.

- Faking out the .NET Runtime version by Rick Strahl.

- My first ScriptCS by Shawn Wildermuth.

- Implement IDisposable by jbe2277.

- Analysing pause times in the .NET GC by Matt Warren.

- Visual Studio 2017 and Visual Studio 2015 with .NET Core by Christian Nagel.

- C# code formatting settings in VS Code and OmniSharp by Filip W.

- Analyzing GitHub LINQ usage – the results by Dror Helper.

- VSTS and MSBuild (v15) by Darren Neimke.

ASP.NET

- An introduction to ViewComponents – a login status view component by Andrew Lock.

- Getting down to business building an ASP.NET Core API service by Rick Strahl.

- When a single ASP.NET client makes concurrent requests for writeable session variables by Sanjay Patel.

- .NET Core and NancyFX: can writing a WebApi get any simpler? by Carlos Mendible.

- Enabling gzip compression with ASP.NET Core by Gérald Barré.

- Standardize page objects with Visual Studio item templates by Anton Angelov.

- File logging on ASP.NET Core by Gunnar Peipman.

F#

- F# has won me over: coming to .NET world from outside .NET, by Tom Prior.

- New release of @fsibot, now on Azure Functions, by Matthias Brandewinder.

- You too can build Xamarin apps with F# , by Greg Shackles.

- Pairwise distance calculation on the GPU, by Xiang Zhang.

- Estimating Pi on the GPU, by Xiang Zhang.

Check out F# Weekly for more great content from the F# community.

Xamarin

- What Xamarin developers ought to know to start 2017 by James Montemagno.

- Xamarin Alpha Preview 7: Cycle 9 by Adrian Murphy.

- Webinar series: Xamarin University presents getting started with Xamarin by Bryan Costanich.

- The top 12 Xamarin blog posts of 2016 by Courtney Witmer.

- Start the new year with Xamarin developer events by Jayme Singleton.

- The Xamarin show: getting started with MVVM & snack pack 6: managing Android SDKs by James Montemagno.

- Securing Mac application with Touch ID by Prashant Cholachagudda.

- Jumpstart your Xamarin app development by Sam Basu.

- Designing a responsive music player in sketch (Part 1) by Christian Krammer.

- Multi-targeting the world: a single project to rule them all by Oren Novotny.

- Adding databound pickers to a ListView by Jesse Liberty.

Azure

- Azure Mobile Apps .NET SDK releases by Adrian Hall.

- Announcing the Azure Tools Extension for Visual Studio Code by Brady Gaster.

- Create your first ASP.NET Core App and host it in a Linux Docker container on Microsoft Azure Part 1, Part 2, and Part 3 by Malte Lantin.

- Azure Storage Queues new feature: pop-receipt on add message by Sercan Guler.

UWP

- Join us on Feb 8th for Windows Developer Day – Creators Update livestream by Windows Apps Team.

- Adafruit Class Library for Windows IoT Core by Rick Lesniak.

- Modern Apps – Exploring the UWP Community Toolkit by Frank La Vigne.

- Using custom fonts in C# UWP apps by Martin Zikmund.

- Hitchhiking the HoloToolkit-Unity, Leg 9–Holes in the Walls by Mike Taulty.

- Windows 10, UWP, IoT Core, SpeechSynthesizer, Raspberry PI and ‘Audio Popping’ by Mike Taulty.

Data

- Paging with Entity Framework Core by Gunnar Peipman.

- What’s New in Entity Framework Core 1.1 by Ricardo Peres.

Games

- Unity Navigation – Part 1 by Stacey Haffner.

- How I taught people to play my game in 8 steps.

- Character design – some tips and tricks by Chris Hildenbrand.

- Why Angry Birds is so successful and popular: a cognitive teardown of the user experience by Charles Mauro.

- Developer perspectives: lessons from E McNeill by E McNeill.

- Why adventure games rock – Part 1 by Bryce Covert.

- Curated #UnityTips No. 28 by DevDog January 2017 by DevDog.

- Unity UFPS tutorial: enemy hit & damage animation by Jay AnAm.

And this is it for this week!

Contribute to the week in .NET

As always, this weekly post couldn't exist without community contributions, and I'd like to thank all those who sent links and tips. The F# section is provided by Phillip Carter, the gaming section by Stacey Haffner, the Xamarin section by Dan Rigby, and the UWP section by Michael Crump.

You can participate too. Did you write a great blog post, or just read one? Do you want everyone to know about an amazing new contribution or a useful library? Did you make or play a great game built on .NET?

We’d love to hear from you, and feature your contributions on future posts:

- Send an email to beleroy at Microsoft.

- Comment on this gist.

- Leave us a pointer in the comments section below.

- Send Stacey (@yecats131) tips on Twitter about .NET games.

This week’s post (and future posts) also contains news I first read on The ASP.NET Community Standup, on Weekly Xamarin, on F# weekly, and on Chris Alcock’s The Morning Brew.

Cumulative Update #4 for SQL Server 2016 RTM

The 4th cumulative update release for SQL Server 2016 RTM is now available for download at the Microsoft Downloads site. Please note that registration is no longer required to download cumulative updates.

To learn more about the release or servicing model, please visit:

- CU#4 KB Article: https://support.microsoft.com/en-us/help/3205052

- Understanding Incremental Servicing Model for SQL Server

- Microsoft® SQL Server® 2016 RTM Latest Cumulative Update: https://www.microsoft.com/en-us/download/details.aspx?id=53338

- Update Center for Microsoft SQL Server: http://technet.microsoft.com/en-US/sqlserver/ff803383.aspx

Cumulative Update #1 for SQL Server 2016 SP1

The 1st cumulative update release for SQL Server 2016 SP1 is now available for download at the Microsoft Downloads site. Please note that registration is no longer required to download cumulative updates.

To learn more about the release or servicing model, please visit:

- CU#1 KB Article: https://support.microsoft.com/en-us/help/3208177

- Understanding Incremental Servicing Model for SQL Server

- Microsoft® SQL Server® 2016 SP1 Latest Cumulative Update: https://www.microsoft.com/en-us/download/details.aspx?id=54613

- Update Center for Microsoft SQL Server: http://technet.microsoft.com/en-US/sqlserver/ff803383.aspx

Cumulative Update #7 for SQL Server 2012 SP3

The 7th cumulative update release for SQL Server 2012 SP3 is now available for download at the Microsoft Downloads site. Please note that registration is no longer required to download cumulative updates.

To learn more about the release or servicing model, please visit:

- CU#7 KB Article: https://support.microsoft.com/en-us/help/3205051

- Understanding Incremental Servicing Model for SQL Server

- Microsoft® SQL Server® 2012 SP3 Latest Cumulative Update: https://www.microsoft.com/en-us/download/details.aspx?id=50733

- Update Center for Microsoft SQL Server: http://technet.microsoft.com/en-US/sqlserver/ff803383.aspx

Last Cumulative Update #16 for SQL Server 2012 SP2

The 16th cumulative update release for SQL Server 2012 SP2 is now available for download at the Microsoft Downloads site. Please note that registration is no longer required to download cumulative updates.

To learn more about the release or servicing model, please visit:

- CU#16 KB Article: https://support.microsoft.com/en-us/help/3205054

- Understanding Incremental Servicing Model for SQL Server

- Microsoft® SQL Server® 2012 SP2 Last Cumulative Update: https://www.microsoft.com/en-us/download/details.aspx?id=50731

- Update Center for Microsoft SQL Server: http://technet.microsoft.com/en-US/sqlserver/ff803383.aspx

Getting started with the Technical Preview of Power BI reports in SQL Server Reporting Services

Today's post will guide you through setting up the January 2017 Technical Preview of Power BI reports in SQL Server Reporting Services on your own PC or server. Make sure the machine you install the preview on meets the same hardware requirements as SQL Server 2016.

– Read Riccardo’s post announcing the Technical Preview

– Download the January 2017 Technical Preview

Before you begin

One change you’ll notice with the new installer is that you do not need a SQL Server Database Engine server available at the time of install, and one is not installed for you as part of the wizard. You will need one to configure Reporting Services after installation, however, so make sure you have a SQL Server database available for this. In addition, if you want to test the new Power BI report functionality, you need to connect to an external Analysis Services server, so you’ll need SQL Server Analysis Services installed and available for use as well. Keep in mind the version of SQL Server Analysis Services needs to be SQL Server 2012 SP1 CU4 or later.
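If you want to confirm that an Analysis Services instance meets the SQL Server 2012 SP1 CU4 minimum before you start, comparing build numbers numerically avoids string-comparison mistakes (for example, "10" sorting before "2"). A small Python sketch; the function names are hypothetical, and because the post does not give the CU4 build number, look it up in the KB article for that update and pass it as `minimum`. SQL Server 2012 builds start with 11.0.

```python
def parse_version(version: str) -> tuple:
    """Turn a dotted build string such as "11.0.3000.0" into a tuple of ints."""
    return tuple(int(part) for part in version.strip().split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """True if the installed build is at least the minimum build."""
    have, need = parse_version(installed), parse_version(minimum)
    # Pad with zeros so "11.0" compares equal to "11.0.0.0".
    width = max(len(have), len(need))
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need
```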

If you need a copy of SQL Server to try, you can get SQL Server 2016 Evaluation or try SQL Server vNext CTP1.1.

If you’d prefer to use a pre-configured environment instead of setting it up yourself, you can use the updated Virtual Machine in the Azure Marketplace.

If you need a sample Analysis Services model, you can use one of the following Adventure Works sample files to get started. Select the sample that matches the mode you have SQL Server Analysis Services running in (Tabular or Multidimensional).

- Download the Adventure Works Internet Sales Tabular Sample Database

- Download the Adventure Works Multidimensional Models

Set up your new report server

1. Find the location where you downloaded the SQLServerReportingServices.exe file and launch the installer.

2. Select Options to change the install path for Reporting Services.

Note: The default path is C:\Program Files\Microsoft SQL Server Reporting Services.

3. Read and agree to the license terms and conditions and then select Install.

4. After a successful setup, select Configure Report Server to launch the Reporting Services Configuration Manager.

After you select Configure Report Server in the setup, you will be presented with Reporting Services Configuration Manager. For more information, see Reporting Services Configuration Manager.

You will now need to create a report server database to complete the initial configuration of Reporting Services. This step requires a SQL Server Database Engine instance. For testing and development, we recommend running it on the same machine where you install Reporting Services.

Start creating reports with Power BI Desktop

As Riccardo discussed in yesterday's post, you'll need to use the preview version of Power BI Desktop we made available to publish content to the preview server you've set up. You will see both 32-bit and 64-bit versions of Power BI Desktop available for download. Unless you are using a 32-bit version of Windows on your local PC, install and use the 64-bit version. It installs locally on your PC and runs side-by-side with the publicly released version of Power BI Desktop. The workflow for creating and publishing reports to SSRS is almost identical to the one we first walked through when we released the Azure VM in October. Please refer to the walkthrough we put together at that time if you have any questions –

- Create Power BI reports in the SQL Server Reporting Services Technical Preview

- Download the preview version of Power BI Desktop
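If you are not sure which Power BI Desktop build to pick, you can check the native architecture of Windows. A small Python sketch, assuming you run it on the target Windows PC; it reads the standard PROCESSOR_ARCHITECTURE and PROCESSOR_ARCHITEW6432 environment variables, and the function names are hypothetical.

```python
import os


def windows_is_64bit(env=None) -> bool:
    """True if the native Windows architecture is x64 (AMD64)."""
    env = os.environ if env is None else env
    # In a 32-bit process on 64-bit Windows (WOW64), PROCESSOR_ARCHITECTURE reports
    # "x86" and PROCESSOR_ARCHITEW6432 holds the native architecture instead.
    arch = env.get("PROCESSOR_ARCHITEW6432") or env.get("PROCESSOR_ARCHITECTURE", "")
    return arch.upper() == "AMD64"


def pick_power_bi_desktop_build(env=None) -> str:
    """Follow the guidance above: 64-bit unless Windows itself is 32-bit."""
    return "64-bit" if windows_is_64bit(env) else "32-bit"
```

Checking PROCESSOR_ARCHITEW6432 first matters because a 32-bit Python on 64-bit Windows would otherwise report "x86" and suggest the wrong build.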

MSDN Documentation now available

Several articles are now available on MSDN that cover what's new in this release and walk you through this and other scenarios with the preview release. You can access them using the links below –

- What’s new in Reporting Services (SSRS)

- Technical Preview of Power BI reports in SSRS – Release notes

- Install the January 2017 Technical Preview of Power BI reports in SQL Server Reporting Services

- Configure Kerberos to use Power BI reports

- Power BI reports in Reporting Services

Try it now and send us your feedback

- Download the January 2017 Technical Preview

- Try the Virtual Machine in the Azure Marketplace

- Post in the Reporting Services forum (or if you prefer, send us an email)

- Join the conversation on Twitter: @SQLServerBI, #SSRS

Azure Machine Learning Now Available in West Central US

This post is authored by Ted Way, Senior Program Manager at Microsoft.

We are pleased to announce that Azure Machine Learning is now generally available in the West Central United States (WCUS) Azure region. With this, Azure ML is now available in these regions:

- United States
  - East US 2
  - South Central US
  - NEW: West Central US
- Europe
  - West Europe
  - Germany Central
- Asia Pacific
  - Japan East
  - Southeast Asia

Migrating Experiments

You can create new workspaces and experiments in WCUS, of course. If you have experiments in other regions that you now wish to migrate to WCUS, you can use the Copy-AmlExperiment cmdlet in PowerShell or publish an unlisted experiment in the Gallery (in the documentation, search for “have it only accessible to people with the link”). Only the people you share the URL with will have access to your experiment. Click the link to open the experiment, then click “Open in Studio” and choose the “West Central US” region.

If you use Free or Guest Access workspaces, they will continue to be created and operated out of the South Central US region.

Deploying Web Services

Whether you want a closer location to reduce latency or another region in North America for high availability, you now have the option to run your web services in WCUS. If you do not want to migrate your experiment but only wish to deploy a web service to WCUS, open a Studio workspace running in any region and create a predictive experiment. Click “Deploy Web Service” and select “Deploy Web Service [New] Preview.”

Once you are in the new web service management portal (in preview), select “Web Services” at the top. Click on the web service you want to copy, and then select “Copy” in the tab. Choose “West Central US” as the region, and then click the “Copy” button. This will create a copy of the web service in WCUS that you can then use just like any other Azure ML web service.
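With a copy of a web service in WCUS, a client can fall back to it when its primary region is unreachable. A minimal Python sketch of client-side failover; the endpoint URLs are placeholders, and `send` is a hypothetical function you supply that posts the scoring request (with that service's API key) and raises `OSError`, for example urllib's `URLError`, on network failure.

```python
from typing import Callable, Sequence


def call_with_failover(endpoints: Sequence[str], send: Callable[[str], dict]) -> dict:
    """Try each regional endpoint in order and return the first successful response.

    `send` should raise OSError when a region is unreachable; urllib's URLError,
    socket timeouts and ConnectionError are all OSError subclasses.
    """
    failures = []
    for url in endpoints:
        try:
            return send(url)
        except OSError as exc:
            failures.append((url, exc))  # remember why this region failed, try the next
    raise RuntimeError(f"all endpoints failed: {failures}")
```

Ordering the list by proximity gives the latency benefit in the normal case, while the WCUS copy serves as the high-availability fallback.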

We look forward to your feedback or comments on the Azure ML forum.

Ted

@tedwinway
