Today, we’re pleased to announce several enhancements that bolster Office 365’s security and compliance capabilities.

With the launch of Office 365 Threat Intelligence, we are enriching security in Office 365 to help customers stay ahead of the evolving threat landscape. Today, we’re also introducing a new reporting interface to improve the customer experience for Advanced Threat Protection (ATP) and extending the ATP Safe Links feature to Word, Excel and PowerPoint for Office 365 ProPlus desktop clients.

Office 365 Advanced Data Governance also launches today, providing our customers with robust compliance capabilities. In addition, a new policy management interface for Data Loss Prevention (DLP) helps Office 365 customers remain compliant and in control of their data.

Let’s take a closer look at these enhancements.

Enhancing threat protection—a path to proactive cyber-defense with Office 365 Threat Intelligence

According to a recent Ponemon Institute study,* the average cost of a data breach has risen to $4 million, with costs incurred for litigation, brand or reputation damage, lost sales—and in some cases—complete business closure. Staying ahead of threats has never been more important.

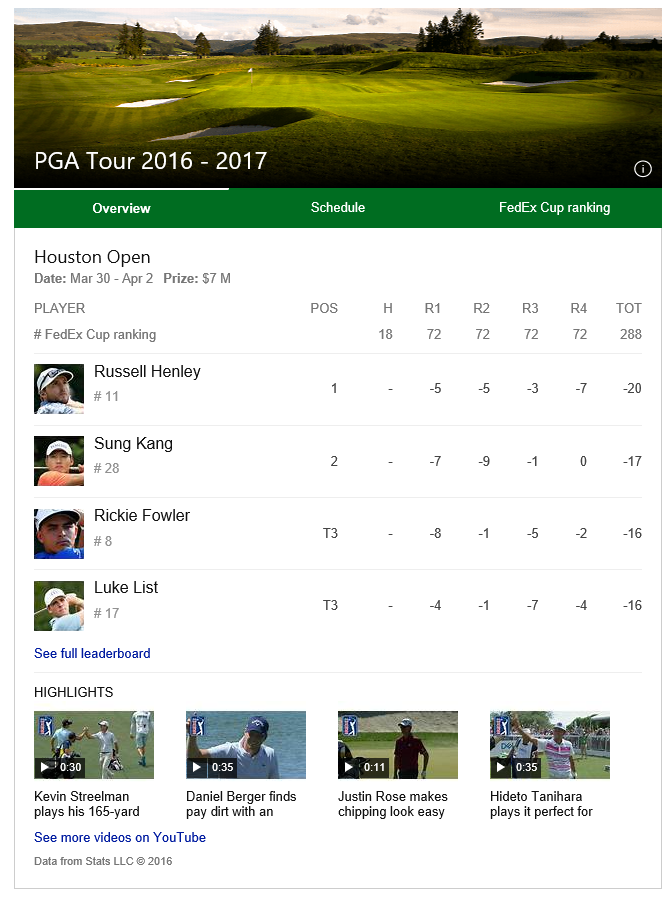

Office 365 Threat Intelligence, now generally available, provides:

- Interactive tools to analyze prevalence and severity of threats in near real-time.

- Real-time and customizable threat alert notifications.

- Remediation capabilities for suspicious content.

- Expansion of Management API to include threat details—enabling integration with SIEM solutions.

To provide actionable insights on global attack trends, Threat Intelligence leverages the Microsoft Intelligent Security Graph, which analyzes billions of data points from Microsoft global data centers, Office clients, email, user authentications, signals from our Windows and Azure ecosystems and other incidents that impact the Office 365 ecosystem.

Threat Intelligence provides information about malware families, both inside and outside your organization, including breach information with details down to the actual lines of code that are used for certain types of malware. It also integrates seamlessly with other Office 365 security features, like Exchange Online Protection and ATP, providing you with an analysis that includes the top targeted users, malware frequency and security recommendations related to your business.

Threat Intelligence is included in the Office 365 Enterprise E5 plan or as a standalone service. Visit Threat Intelligence—Actionable insights for global threats to learn more.

New Office 365 Advanced Threat Protection (ATP) reporting interface

The new reporting interface for Office 365 Advanced Threat Protection (ATP) is now available in the Office 365 Security & Compliance Center. Its security reports provide insights and trends on the health of your organization, including information about malware and spam sent or received in your organization and advanced threat detections that Office 365 ATP helped discover and stop.

Using the new reporting interface, admins can schedule reports to be sent directly to their inbox, request custom reports and download or manage these reports through dashboards in the Security & Compliance Center. As part of our continued effort to provide customers with a powerful and robust advanced security solution, this new reporting interface helps you understand how ATP keeps today’s most sophisticated threats from impacting your organization.

[Image: The new ATP reporting interface.]

Extending ATP Safe Links to Office 365 ProPlus desktop clients

Later this month, we will enable ATP Safe Links for Office 365 ProPlus desktop clients, a unique demonstration of the power of collaboration across the Microsoft ecosystem. The Safe Links feature in ATP already protects customers from malicious links in email. As cyber criminals broaden the scope of attacks beyond email workloads, it’s necessary to extend security capabilities beyond email as well.

Safe Links is already integrated across Outlook desktop, web and mobile to help protect a user’s inbox across devices. With this extension, when a user clicks a link in an Office 365 ProPlus desktop application (Word, Excel or PowerPoint), ATP will inspect the link to see if it is malicious. If the link is malicious, the user will be redirected to a warning page instead of the original target URL, protecting the user from compromise. This new capability will further integrate and expand security across Office 365. Our intent has always been to provide our customers with an end-to-end, unified and secure experience across all of Office 365, and this extended capability of Safe Links is another step toward that goal.
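The click-time behavior described above can be pictured with a small conceptual sketch. This is not how ATP is implemented: the host list, the warning page URL and the function names below are all hypothetical stand-ins, and a real service would consult a reputation system rather than a fixed set.

```python
from urllib.parse import urlparse

# Hypothetical stand-in for a link reputation service (not a real ATP API).
KNOWN_MALICIOUS_HOSTS = {"malicious.example"}

# Hypothetical warning page shown instead of a blocked target.
WARNING_PAGE = "https://warning.example/blocked"


def is_malicious(url: str) -> bool:
    """Return True if the link's host is on the hypothetical blocklist."""
    host = urlparse(url).hostname or ""
    return host in KNOWN_MALICIOUS_HOSTS


def resolve_click(url: str) -> str:
    """Decide, at click time, where the user should be sent.

    Malicious links are redirected to the warning page; all other
    links pass through to their original target.
    """
    if is_malicious(url):
        return WARNING_PAGE
    return url
```

The point of the sketch is that the check happens when the link is clicked, not when the document is created, so a link that turns malicious later can still be caught.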

Ensuring compliance—why Office 365 Advanced Data Governance matters

As the amount of electronic data grows exponentially, many organizations are exposing themselves to risk by retaining unnecessary data. For example, many organizations continue to retain the personal information of former employees who left the company long ago. If this data were compromised in a breach, the company could be liable for costly remediation, such as lifetime credit monitoring for these former employees.

Office 365 Advanced Data Governance applies machine learning to help customers find and retain important data while eliminating trivial, redundant and obsolete data that could cause risk if compromised.

Advanced Data Governance, also now generally available, delivers the following capabilities:

- Proactive policy recommendations and automatic data classifications that allow you to take actions on data, such as retention and deletion, throughout its lifecycle.

- System default alerts to identify data governance risks, such as “Unusual volume of file deletion,” as well as the ability to create custom alerts by specifying alert matching conditions and thresholds.

- The ability to apply compliance controls to on-premises data by intelligently filtering and migrating that data to Office 365.
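To make the idea of a custom alert with a matching condition and a threshold more concrete, here is a minimal sketch. It is only an illustration under stated assumptions: the `AlertRule` class, the event dictionaries and the `FileDeleted` activity name are hypothetical and do not reflect the actual Advanced Data Governance configuration model.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    """Hypothetical custom alert: a matching condition plus a threshold."""
    name: str
    activity: str   # matching condition: the activity type to count
    threshold: int  # number of matching events that triggers the alert


def is_triggered(rule: AlertRule, events: list) -> bool:
    """Return True when enough events match the rule's condition."""
    matches = sum(1 for event in events if event.get("activity") == rule.activity)
    return matches >= rule.threshold


# Hypothetical rule loosely modeled on "Unusual volume of file deletion".
deletion_rule = AlertRule(
    name="Unusual volume of file deletion",
    activity="FileDeleted",
    threshold=3,
)
```

In practice such a rule would be evaluated over a time window; the sketch leaves that out to keep the condition-and-threshold idea visible.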

Customers are already seeing value from Advanced Data Governance. Tom Stauffer, vice president of Records and Information Management for the Walt Disney Company, says:

“Effective governance of unstructured information across communication, content and social platforms has long been a goal of organizations. Microsoft Office 365 Advanced Data Governance appears to provide a well-thought-out solution that is integrated into their entire Office 365 suite. This functionality and integration provides the powerful potential of delivering on this long-sought-after goal, and doing so without a major burden to end users.”

In the coming months, we will be delivering additional Advanced Data Governance enhancements, such as event-based retention, manual disposition and supervision.

Office 365 Advanced Data Governance is included in the Office 365 Enterprise E5 plan. It is also available as part of the Office 365 Advanced Compliance plan, which additionally includes Office 365 Advanced eDiscovery and Customer Lockbox for a comprehensive set of expanded compliance capabilities.

For a deeper demo of Office 365 Advanced Data Governance, watch this presentation.

Enhanced Office 365 Data Loss Prevention (DLP) management experience

Customers all over the world use Data Loss Prevention (DLP) policies in Office 365 to help prevent sensitive information from getting into the wrong hands. Because of your feedback, we put DLP management front and center, providing quick access to content protection policies, app permissions and device security policies—all in one place.

It’s now easier than ever to configure and enforce sensitive data policies across your organization using the new DLP management experience in the Office 365 Security & Compliance Center. The new Policy page shows you important information about your current DLP policies at a glance, with detailed audit reports just a click away. It’s also easier to turn on and configure DLP—simply choose what you want to protect, then specify any special conditions to look for and the automatic actions you want to enforce to protect your important data. You can also go into the advanced settings to access additional customization and configuration options to help meet your specific compliance requirements. Learn more in this article.

[Image: The enhanced DLP management experience makes it easier to create and manage policies.]

Join our Security, Privacy & Compliance tech community

These new features help broaden and enhance the scope of security and compliance capabilities within Office 365. To further evolve your organization’s security and compliance with these services, join our Security, Privacy & Compliance tech community. It is a great resource for communicating with and learning from your peers, as well as for offering your insights on the growing importance of security, privacy and compliance.

*IDC Ponemon Institute, Sponsored by IBM, Cost of a Data Breach Report (2016)

The post Announcing the release of Threat Intelligence and Advanced Data Governance, plus significant updates to Advanced Threat Protection appeared first on Office Blogs.

When one of the world’s leading financial institutions invests in Microsoft Office 365 and Azure, it’s a testament to the confidence business leaders have in the security, privacy, and compliance enabled by the Microsoft Cloud. As a group, AXA has globally adopted our comprehensive cloud services, taking a leadership role in the industry and enabling a modern, secure workplace. Rather than looking at the global deployment of Office 365 as yet another routine upgrade, IT leaders are taking advantage of a cloud platform that empowers employees with innovative tools available on any device.

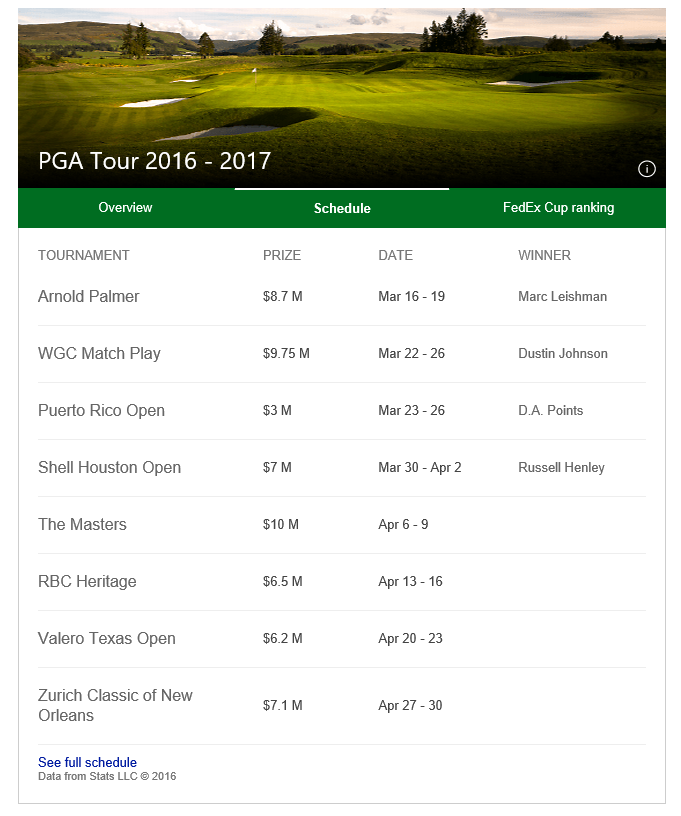

Last year, we announced the #ExcelWorldChamp competition. From October to November 2016, Microsoft ran four rounds of Excel tests for residents of select countries. The top competitors in each round made it through to the next level—until there was one Excel champ from each country!
