ASP.NET 5 beta8 is now available both on NuGet and as a tooling update to Visual Studio 2015! This release greatly expands the supported surface area of .NET Core on OS X and Linux. You can now use networking, cryptography and globalization features cross-platform! This release also includes some nice improvements to ASP.NET 5, DNX and the Web tools. Let’s take a look at how we can get started with ASP.NET 5 beta8.

Installation

You can find instructions in our documentation for installing ASP.NET 5 beta8 on Windows, Mac and Linux.

New Features

Below is a summary of some of the new features in ASP.NET 5 beta8. For a full list of what is new in this release please refer to the beta8 release notes.

Changes to IIS Hosting Model

We’ve made a major update to the IIS hosting model for ASP.NET 5 in beta8. Up to and including beta7, ASP.NET 5 applications running in IIS have been hosted by a component named "Helios", contained in the Microsoft.AspNet.Server.IIS package. This component facilitated the bootstrapping of DNX and CLR using a hook in the existing System.Web hosting model. This hook replaced the runtime after the application had started (from IIS's point of view). This effectively made "Helios" a second DNX host, meaning it contained its own logic pertaining to locating, booting, and loading the runtime. It also meant a second set of logic to enable things like runtime servicing, as well as configuration of certain DNX-level settings.

Having two distinct hosting models for ASP.NET 5 introduced a number of complexities and inconsistencies that were difficult or impossible to resolve. To address this we're discontinuing the "Helios" IIS host. Hosting ASP.NET 5 applications in IIS will now be achieved using the IIS HttpPlatformHandler configured to forward through to the ASP.NET 5 Kestrel server. The HttpPlatformHandler is a native IIS module that needs to be installed by an administrator on the server running IIS (installers: x86, x64). It’s also already included with the beta8 Web tools update for local development on IIS Express. This native IIS module manages the launching of an external application host process (in this case dnx.exe) and the routing of requests from IIS to the hosted process.

Simplifying the model to a single hosting option (with the same scenarios still supported) means fewer things for developers to code for and test. Additional benefits of the new model include:

- The IIS AppPool doesn't need to run any managed code (you can literally configure it to not load the CLR at all)

- The existing ASP.NET Windows component does not need to be installed to run on Windows Servers

- Existing ASP.NET 4.x modules can run in IIS alongside the HttpPlatformHandler since the ASP.NET 5 process is separate

- You can set environment variables per process, since the HttpPlatformHandler supports it. This makes it possible to set things like the ASP.NET 5 environment configuration on local IIS servers.

- Unified error handling for startup errors across all servers

- Code and behavior unification

- Support for app.config when running on .NET Framework (full CLR) whether self-hosted or in IIS (no more web.config even for .NET Framework compatibility)

- Unified servicing story

- Unified boot-up story (no odd AspNetLoader.dll in the bin folder)

You’ll notice that the ASP.NET 5 project templates in Visual Studio have been updated to include a web.config file in the wwwroot folder of your application.

This web.config file adds the HttpPlatformHandler to your application and configures the handler to forward requests to a DNX process. Visual Studio handles setting up the DNX_PATH environment variable to point to the appropriate DNX version for your application.
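As a rough sketch of what such a web.config can look like: the fragment below registers the HttpPlatformHandler for all requests and points it at the DNX process through the DNX_PATH environment variable mentioned above. The handler name, the %DNX_ARGS% variable, and the logging and timeout values are assumptions for illustration, not necessarily what the templates generate.

```xml
<configuration>
  <system.webServer>
    <handlers>
      <!-- Route every request through the native HttpPlatformHandler module -->
      <add name="httpPlatformHandler" path="*" verb="*"
           modules="httpPlatformHandler" resourceType="Unspecified" />
    </handlers>
    <!-- processPath: the DNX executable to launch (set by Visual Studio).
         arguments, stdoutLogEnabled and startupTimeLimit are assumed values. -->
    <httpPlatform processPath="%DNX_PATH%"
                  arguments="%DNX_ARGS%"
                  stdoutLogEnabled="false"
                  startupTimeLimit="3600" />
  </system.webServer>
</configuration>
```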

When you publish your application the process path in web.config is updated to point to the “web” command defined by your application. You can opt to use a different command instead using the --iis-command option when running dnu publish.
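For context, commands such as “web” live in the commands section of project.json. The fragment below is a minimal sketch that assumes the “web” command maps to the Kestrel server package; check your own project.json for the actual value.

```json
{
  "commands": {
    "web": "Microsoft.AspNet.Server.Kestrel"
  }
}
```

Any other command defined in this section could, in principle, be named with the --iis-command option instead.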

For more details on these changes to the IIS hosting model please see the corresponding announcement.

Localization

ASP.NET 5 now has built-in support for localization. The new localization support provides middleware for specifying the correct culture and UI culture on the thread based on the request and also mechanisms for accessing localized content based on the current culture.

You enable localization in your application by adding the request localization middleware to your request pipeline in your Startup class:

app.UseRequestLocalization(options);

The request localization middleware uses a set of configured IRequestCultureProvider implementations to determine the culture for the request. Built-in providers can determine the culture from the request using the Accept-Language header, a query string value, or from a cookie. You can also build and specify your own IRequestCultureProvider.

Once the request localization middleware determines the current culture it sets it on the thread. The IStringLocalizer service then provides access to localized content based on the current culture. You enable support for these localization services like this:

services.AddLocalization(options => options.ResourcesPath = "resources");

The ResourcesPath specifies the path where the localized resources are located relative to the application root. You can use the IStringLocalizerFactory service to create an IStringLocalizer for a specific resource or simply request an IStringLocalizer directly.

The default implementations of these services are based on System.Resources.ResourceManager, which supports accessing localized content in satellite assemblies based on resx files. You can alternatively provide your own implementations for accessing localized content from different sources, such as a database.

You can see a full working sample of these localization features in the Localization repo.

Localization and MVC

MVC builds on the new localization support in ASP.NET 5 to enable localization in controllers and views. MVC introduces a few additional services for localization built on the core localization services.

To enable the MVC-specific localization features, you add the following when configuring the MVC services:

services
    .AddMvc()
    .AddViewLocalization(options => options.ResourcesPath = "Resources");

The IHtmlLocalizer service (with accompanying IHtmlLocalizerFactory) adds support for getting localized HTML strings with properly encoded arguments. You can use an IHtmlLocalizer from your controllers like this:

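// The localizer is typed on the controller so that resources are looked up for HomeController.
private readonly IHtmlLocalizer<HomeController> SR;

public HomeController(IHtmlLocalizer<HomeController> localizer)
{
    // Keep the injected localizer in the field used by the actions below.
    SR = localizer;
}

public ActionResult Index()
{
    ViewData["Message"] = SR["Localize me!"];
    return View();
}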

The IViewLocalizer is an IHtmlLocalizer service that looks for a resource based on the current view name. You can inject an IViewLocalizer into your view using the @inject directive, like this:

@inject IViewLocalizer SR
@SR["Localized header"]

MVC also provides a LanguageViewLocationExpander that enables the view engine to look for views that are suffixed with a specific culture. For example, you can have index.cshtml and index.en-GB.cshtml. The view that is selected depends on the current culture.

Error messages from validating data annotations can be localized by adding the following option when setting up the MVC services:

services
    .AddMvc()
    .AddViewLocalization(options => options.ResourcesPath = "Resources")
    .AddDataAnnotationsLocalization();

Any validation error messages from data annotations will then be localized using the available IStringLocalizer service.

DNX Watch command

The dnx-watch command will run your application and then watch all of the project files for changes. When a file is changed the dnx-watch command restarts the application. This enables a rapid development workflow where you edit the code, save, and then refresh your browser to see the changes.

To install the dnx-watch command run the following:

dnu commands install Microsoft.Dnx.Watcher

You can then start the dnx-watch command from the same directory where your project.json is located. Any arguments passed to dnx-watch will get passed along to dnx:

C:\Users\danroth27\Documents\WatchTest\src\WatchTest>dnx-watch web

[DnxWatcher] info: Running dnx with the following arguments: --project "C:\Users\daroth\Documents\WatchTest\src\WatchTest\project.json" web

[DnxWatcher] info: dnx process id: 8348

Hosting environment: Production

Now listening on: http://localhost:5000

Application started. Press Ctrl+C to shut down.

Browse to your application to see the current content:

![]()

Modify your code and save the file:

public IActionResult About()
{
    ViewData["Message"] = "Watch this!";
    return View();
}

Refresh the browser to see the changes:

![]()

Better view precompilation

We’ve improved precompilation of MVC views in this release to work seamlessly with tag helpers. You can now use view precompilation even when using custom tag helpers in your views.

Specify target frameworks when publishing

When publishing an application, you can now specify the target framework that you want to publish for:

dnu publish --framework dnx451

This will trim the set of packages included in the published app to just the packages required for the specified target framework, resulting in a smaller deployment payload.

Clear the HTTP response cache used for package restore

When restoring packages dnu will normally do HTTP response caching for any requests sent. You can now easily clear the HTTP response cache by running:

dnu clear-http-cache

Target .NET 2.0 and .NET 3.5

You can now target .NET 2.0 and .NET 3.5 in your DNX projects. Simply use the net20 and net35 target framework monikers in the frameworks node of your project.json file.
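A minimal sketch of the frameworks node, assuming a project that targets only these two frameworks and needs no per-framework settings:

```json
{
  "frameworks": {
    "net20": { },
    "net35": { }
  }
}
```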

Running scripts before and after building and creating packages

One of the nice features of DNX is that it will handle building for multiple target frameworks (e.g. net46, dnxcore50) and multiple build configurations (e.g. Debug, Release). In project.json you can define command-line scripts that run before and after each build and package creation. Previously these scripts would only run once, but in this release they run for each build configuration and each target framework.

For example, if you have two build configurations (Debug and Release) and two target frameworks (net46 and dnxcore50) then the prebuild and postbuild scripts will run four times: once for each combination of build configuration and target framework. The prepack and postpack scripts will run twice: once for each build configuration.

In your prebuild and postbuild scripts you can use the %build.Configuration% and %build.TargetFramework% variables to get access to the current build configuration and target framework. The %build.Configuration% variable is also available in your prepack and postpack scripts.
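As an illustration, a scripts section in project.json might look like the sketch below. The echo commands are placeholders, and the variable names are copied as written in the text above, so verify the exact spelling against the release notes before relying on them.

```json
{
  "scripts": {
    "prebuild": "echo Building %build.Configuration% for %build.TargetFramework%",
    "postbuild": "echo Built %build.Configuration% for %build.TargetFramework%",
    "prepack": "echo Packing %build.Configuration%",
    "postpack": "echo Packed %build.Configuration%"
  }
}
```

With the two configurations and two target frameworks from the example above, the prebuild and postbuild messages would each appear four times and the prepack and postpack messages twice.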

Add additional files to a package

DNX generally handles generating NuGet packages for your projects, but sometimes you need to add additional files to the packages. You can now specify additional content files for your NuGet packages in project.json using the new packInclude property:

"packInclude": {
    "destination1/": "source1/**",
    "destination2/": "source2/**",
    "destination2/some_additional_file.txt": "source2a/somefile.txt",
    "destination3/": ["source3/a.txt", "source3/b.txt", "source4/c/**/*.ps1"]
}

The packInclude property specifies a set of destinations in the package and the source files that should be included at those destinations. You can use globbing patterns when specifying source files to include, or list individual files in an array. The destination can be a specific file name and path or it can be a directory. To specify a directory as the destination you include a trailing slash.

When you build your package all of the specified source files will get included in the package at the specified locations.

Explicitly target dependencies at packages or projects

When DNX resolves dependencies specified in project.json it will resolve dependencies as installed packages or as project references. This gives you the flexibility to swap out package dependencies as source code or vice versa. But sometimes you want to be explicit about the target type for the dependency. For example, you may want to make sure that you don’t accidentally resolve a dependency from a configured NuGet feed that should really just be a project reference.

You can now explicitly specify the target type for a dependency in project.json to ensure that the dependency only comes from a package or a project:

"dependencies": {
    "ClassLibrary1": { "version": "1.0.0-*", "target": "project" },
    "ClassLibrary2": { "version": "1.0.0-*", "target": "package" }
}

The target property for ClassLibrary1 indicates that it’s a project reference, not a package. Similarly, the target property for ClassLibrary2 indicates it’s a package, not a project. If the target property is not specified, then the target can be either a package or a project.

Dnvm uninstall

For those of you who have been keeping up with all the latest DNX release updates you probably have quite a few older versions of DNX hanging out on your machine. You can always go into your user profile and delete the folder for a specific DNX from the ~/.dnx/runtimes folder. But now there is an even easier way. The DNVM tool now supports uninstalling specific versions of DNX. Simply run dnvm uninstall to uninstall a specific DNX version from your machine and it will delete the corresponding runtime folder for you.

New Visual Studio 2015 Features for ASP.NET 5

Hiding files in Solution Explorer

In this release we have added the ability to hide files from the default view in Solution Explorer. To hide a file, in Solution Explorer you can use the new Hide from Solution Explorer context menu option. In the image below, you can see the context menu for a file that I’m going to hide from Solution Explorer.

![]()

After using Hide from Solution Explorer the item will no longer show up in Solution Explorer. This is facilitated by adding a new entry into the .xproj file. For the case above the new entry in the .xproj file is.
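As a hedged sketch, an entry of this kind in the .xproj file may look like the fragment below. The DnxInvisibleContent item name is an assumption based on how ASP.NET 5 project files mark hidden content, and notes.txt is a hypothetical file name used only for illustration.

```xml
<ItemGroup>
  <!-- notes.txt is a hypothetical file hidden from Solution Explorer -->
  <DnxInvisibleContent Include="notes.txt" />
</ItemGroup>
```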

After the file is hidden from Solution Explorer you will not see it by default. If you need to interact with hidden files you can use the Show All Files button on Solution Explorer. You can see this being used in the screenshot below.

![]()

Info bar when restoring NuGet packages

One piece of feedback from users on the new ASP.NET 5 experience that we have received is that it’s not clear when NuGet packages are being restored. This is because Visual Studio 2015 will automatically restore NuGet packages as needed. For example, when you open a project, if NuGet packages are missing they will be restored. Similarly when you edit project.json to add a new dependency, that will kick off a NuGet restore. There are other times when Visual Studio starts a restore.

To make it clearer when a NuGet package restore operation is in progress, we have introduced a new info bar for ASP.NET 5 projects. Now when a NuGet package restore is in progress you’ll see the following info bar in Solution Explorer.

![]()

The info bar at the top of Solution Explorer indicates that a restore is in progress and how many projects are participating in that. Directly from the info bar you can open the output of the restore operation with the Output link. You can also click on Settings so that you can configure the behavior of the info bar. In the settings page you can specify when the info bar should show up.

Show dependency errors in project.json and Solution Explorer

In previous releases if there were any issues with dependencies you would have to go to the output window, or Error List, to see errors and warnings about packages. With this release we have integrated showing dependency errors/warnings directly in project.json and Solution Explorer.

In the image below, I’ve modified the BrowserLink dependency to have an invalid version and added a dependency on a package that doesn’t exist, SomeMissingPackage. Both of those entries in project.json have squiggles indicating an issue.

![]()

When you hover over an entry in project.json you’ll see the corresponding error/warning message. For example, see the image below.

![]()

Dependency issues are now also indicated in Solution Explorer. In the image below you can see the view in Solution Explorer for the sample above.

![]()

In the previous image the icons for BrowserLink and SomeMissingPackage show a warning. In addition to this, you can see dependency errors and warnings in the Error List.

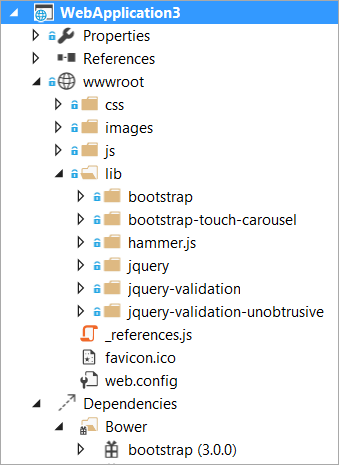

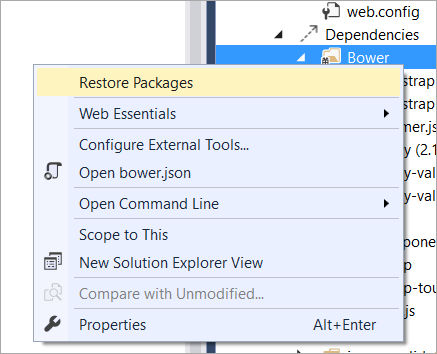

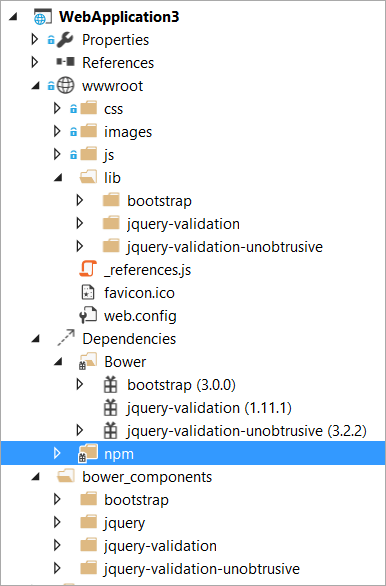

Update NuGet package view in Solution Explorer

In Solution Explorer we have updated the view under the References node for ASP.NET 5 projects. In previous releases, we used the blue NuGet icon for every NuGet package. We also used a generic icon for a project-to-project reference. In the image below you can see the improved view.

![]()

In the image above there are a few changes. The icon for NuGet package references has been updated to one that fits better with the other icons in Solution Explorer.

The icon for a project-to-project reference has been updated so that it’s clear that it’s a project-to-project reference. Project-to-project references will always be shown at the top of the list.

In addition to the icon updates, we now show Framework Assemblies at the bottom of the reference list. This node lists the assemblies that the framework makes available.

Open source templates

Lastly, our Visual Studio project templates for ASP.NET 5 and DNX are now open source on GitHub! The Templates repo contains the templates used in Visual Studio for ASP.NET 5 and DNX based projects (ASP.NET 4.6 templates are still managed separately). You can now contribute to the template code and provide feedback via the public Templates issue tracker.

Summary

This release includes a variety of new runtime features and tooling enhancements. Please download and try out ASP.NET 5 beta8 today and let us know what you think on GitHub. We hope you enjoy it!
