Bugs Fixed in Visual Studio 2013 Update 3

Use a Microsoft Account to Create Web Apps Protected by Azure AD - With VS2013 Update 3

Back in December I posted an article that described a limitation of the organizational identities support in the ASP.NET project templates in Visual Studio 2013.

In a nutshell: the ASP.NET project templates in Visual Studio 2013 can automatically create an entry for your application in Azure Active Directory, so that your project is created already configured to perform web sign-on from the very start. That task requires a write operation in the Azure AD of choice, hence Visual Studio needs to acquire a valid token for performing it. To do so, it prompts you, the developer, for a valid account with administrative privileges in the target directory.

Azure AD admits both “native” directory users (often of the form

I am happy to announce that the problem has been resolved in VS 2013 Update 3! You can now use an MSA to configure your ASP.NET apps to use Azure AD for authentication. In this post I will show you how to take advantage of the feature. Rather than just giving you instructions, I will also give you some context about the directory assets that every Azure subscriber gets out of the box.

Your Default Azure AD Tenant in Your Azure Subscription

Here is some background on what we are going to work with.

Every Azure subscriber automatically gets a default Azure AD tenant that can be used for development purposes. The rules are slightly different between the case in which your subscription is backed by your organization and connected to your on-premises directory, and the case in which you subscribed as an individual using your MSA. In this post I will focus on the latter.

In fact: to make sure I’d describe the experience as faithfully as possible, for this tutorial I created a brand new MSA (testmsauser@outlook.com) and I used it to get a free trial subscription.

That done, I headed to https://manage.windowsazure.com.

Of course I set up my new MSA with two-factor authentication, hence the screen below. I am sure that all of you did that already, but just in case, you can do it here.

Once you’re in, you’ll see that your subscription contains a default directory. Let’s click on that.

The default directory is a full-blown Azure AD tenant, ready for you to develop against. Let’s take a look around: click on USERS from the top bar.

At creation time, the only user in the directory is the Azure subscription’s administrator: in our case, testmsauser@outlook.com. It is in the global administrators role for the directory, which means that it can do pretty much anything.

From here you can add new users: they can be guest users (from MSA or other directories), or they can be native directory users, originating from this tenant. You’ll do that later in this tutorial.

Such users will

Azure created the default directory by deriving the domain name from the MSA of the administrator: in this case, our tenant is testmsauseroutlook.onmicrosoft.com. You can add custom domains by going through a verification step, but for our purposes the default domain will do just fine.
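As a rough illustration of the naming pattern seen here, the sketch below derives a default tenant domain from an MSA address. The rule it applies (local part plus the first label of the email domain) is inferred only from the single example in this post, and `default_tenant_domain` is a hypothetical helper, not an Azure API.

```python
def default_tenant_domain(msa: str) -> str:
    """Guess the default tenant domain for an MSA (assumed rule, see above)."""
    local_part, _, email_domain = msa.partition("@")
    if not local_part or not email_domain:
        raise ValueError(f"not an email address: {msa!r}")
    # Assumption: only the first label of the email domain is kept
    # ("outlook" from "outlook.com").
    first_label = email_domain.split(".")[0]
    return f"{local_part}{first_label}.onmicrosoft.com".lower()


print(default_tenant_domain("testmsauser@outlook.com"))
```

Always check the actual domain name in the portal; Azure may apply rules that this guess does not cover.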

Before leaving the portal and switching to Visual Studio, let’s take a quick look at the APPLICATIONS tab.

As the subscription is brand new, there are no entries here yet.

In order to protect an application with Azure AD, Azure AD needs to know important details about the app: which URLs it will use, which identifiers will be exchanged at authentication time, and so on. You can let Azure AD know about your application by creating a new entry for it via the portal; that’s what the ADD button on the bottom bar does. However, as mentioned at the beginning of the post, you can also provision an app entry directly via Visual Studio 2013. That’s pretty handy, given that Visual Studio can automatically provide application properties (like the app URL, which at development time will likely be the URL assigned by IIS Express) that you would otherwise have to type.
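To make "the details Azure AD needs to know" a bit more concrete, here is a minimal sketch of the kind of record involved. The `AppEntry` class, its field names, the port number, and the way the identifier is composed are all assumptions made for illustration; this is not the directory's actual schema, nor what Visual Studio necessarily writes.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AppEntry:
    """Hypothetical, simplified view of an application entry in a directory."""
    display_name: str
    sign_on_url: str   # where the app lives, e.g. the IIS Express URL
    app_id_uri: str    # identifier exchanged at authentication time
    reply_urls: List[str] = field(default_factory=list)


def entry_for_local_project(name: str, tenant_domain: str, port: int) -> AppEntry:
    """Fill in the values a tool could infer for a project running locally."""
    base_url = f"https://localhost:{port}/"
    return AppEntry(
        display_name=name,
        sign_on_url=base_url,
        # Assumption: the identifier is built from the tenant domain and app name.
        app_id_uri=f"https://{tenant_domain}/{name}",
        reply_urls=[base_url],
    )


entry = entry_for_local_project(
    "WebApplication1", "testmsauseroutlook.onmicrosoft.com", 44300
)
print(entry)
```

The point is simply that a tool that knows the project name and the local URL can fill in these values without you having to type them.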

Creating an ASP.NET App Protected by Azure AD

Did you install the RTM of Update 3 for Visual Studio 2013? Excellent! Follow the instructions below to create an Azure AD protected project.

Select New Project, then Web. Pick ASP.NET Web Application.

In this tutorial I’ll create an MVC app, but you can pick Web Forms or Web API just as well.

Click Change Authentication and you’ll land on the dialog below.

Here you have all the controls you need to tell VS to secure your app with Azure AD.

In the left hand side list, select Organizational Accounts. Leave all the defaults.

In Domain, enter the domain of your default directory (mine is testmsauseroutlook.onmicrosoft.com). Click OK.

In this phase, Visual Studio acquires a token from Azure AD that will be used to write the new application’s details to the directory. To do so, it needs you to authenticate. From Update 3 on, you can use an MSA or a native directory user here. Given that my test directory contains only my MSA, I’ll use that.

You are presented with the Azure AD authentication dialog. As soon as you type your MSA in the username field, you’ll be redirected to the MSA authentication page.

My user has two-factor authentication enabled, hence I’ll be prompted for a code even though here I am using a rich client (Visual Studio). Pretty handy!

Once VS has successfully authenticated, all you need to do is hit OK and your project will be generated.

Visual Studio adds the app entry to the directory automatically. Want proof? Let’s go back to the portal, head back to the APPLICATIONS list and refresh the browser.

As you can see, there is now a new entry – with the same name as the VS project. Click on it, then select CONFIGURE.

If you scroll through that page, you’ll see that the app has been correctly provisioned by Visual Studio.

Creating a Test Directory User

We are not quite ready to test the app yet. The app entry in Azure AD is complete and fully functional; however, the Azure AD protocol head it uses (WS-Federation) still suffers from the old restriction of accepting only native directory users. This restriction will be lifted soon (and it is already resolved for the OpenID Connect protocol head). In the meantime, if we want to test the app right now we need to create a new user in the directory. Luckily, it’s super simple.
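For readers curious about what a WS-Federation sign-in request looks like on the wire, here is a minimal sketch that builds one. The `wa`, `wtrealm` and `wreply` parameters are standard WS-Federation passive sign-in parameters; the endpoint shape, the realm value and the local port are assumptions for illustration, so check your own project's configuration for the real values.

```python
from urllib.parse import parse_qs, urlencode, urlparse


def wsfed_signin_url(tenant: str, realm: str, reply_url: str) -> str:
    """Build a WS-Federation passive sign-in request URL."""
    query = urlencode({
        "wa": "wsignin1.0",   # WS-Federation sign-in action
        "wtrealm": realm,     # identifier of the app requesting the token
        "wreply": reply_url,  # where the token should be posted back
    })
    # Assumption: a tenant-specific WS-Federation endpoint of this form.
    return f"https://login.windows.net/{tenant}/wsfed?{query}"


url = wsfed_signin_url(
    "testmsauseroutlook.onmicrosoft.com",
    "https://testmsauseroutlook.onmicrosoft.com/WebApplication1",
    "https://localhost:44300/",
)
print(url)
print(parse_qs(urlparse(url).query)["wa"])
```

The generated project takes care of this redirect for you; the sketch only shows which pieces of the app entry end up in the request.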

Go to the USERS tab and hit ADD. Fill in any details you want until you reach the Get temporary password screen.

Hit the create button.

Azure generates a temporary password for the new user. The user will be forced to change it at first login, so I usually perform a sign-in immediately to get that task out of the way. Open an InPrivate browser window and navigate to myapps.onmicrosoft.com, the portal gathering all the apps approved by your admin to be used with AAD. (It does not really matter where you go at this point, as long as it is an AAD protected site. I like myapps because it’s easy to remember!)

You’ll be bounced to the Azure AD authentication page.

Enter your temporary password. You’ll be asked to enter a new password right away.

Once you’ve done that, Azure AD will make you test your new password by re-entering it.

Your new user has its new password. We are ready to give the app a spin.

Testing the Project

Let’s get back to Visual Studio. There are no changes required; all you need to do is hit F5.

You’ll be prompted to authenticate, as expected. Enter the credentials of the directory user you just created.

And voilà! The new application signed in the directory user, as expected.

Summary

If you have feedback, there are multiple venues you can use to reach the team. Please use Connect to submit bugs, ASP.NET UserVoice to submit and vote for suggestions, and the ASP.NET Forums for Q&A.

We hope that this feature will help even more of you to take advantage of the great identity features that come with your Azure subscriptions!

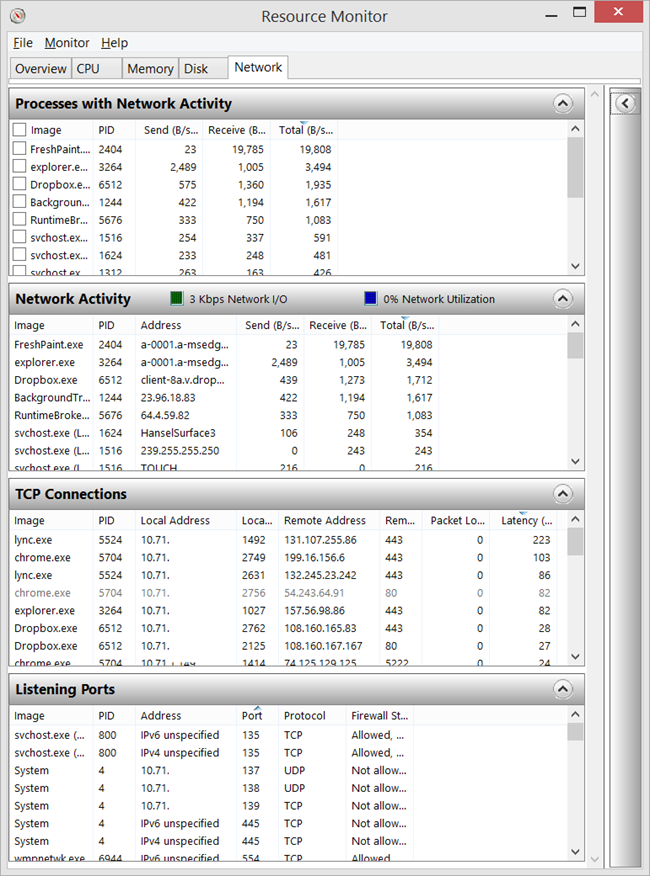

You aren't using Resource Monitor enough

Most folks learn how to use Task Manager pretty quickly. We've all been on the phone with a non-technical relative and asked them to open up Task Manager.

As we move from user to technical user we are introduced to SysInternals tools and perhaps Process Monitor for finding out what's happening to a disk. However, I find that for quick questions Resource Monitor is faster to access and its information is easier to interpret.

You can bring Task Manager up, of course, by right clicking the Taskbar and clicking Task Manager. Or, hit Ctrl-Alt-Del then Task Manager.

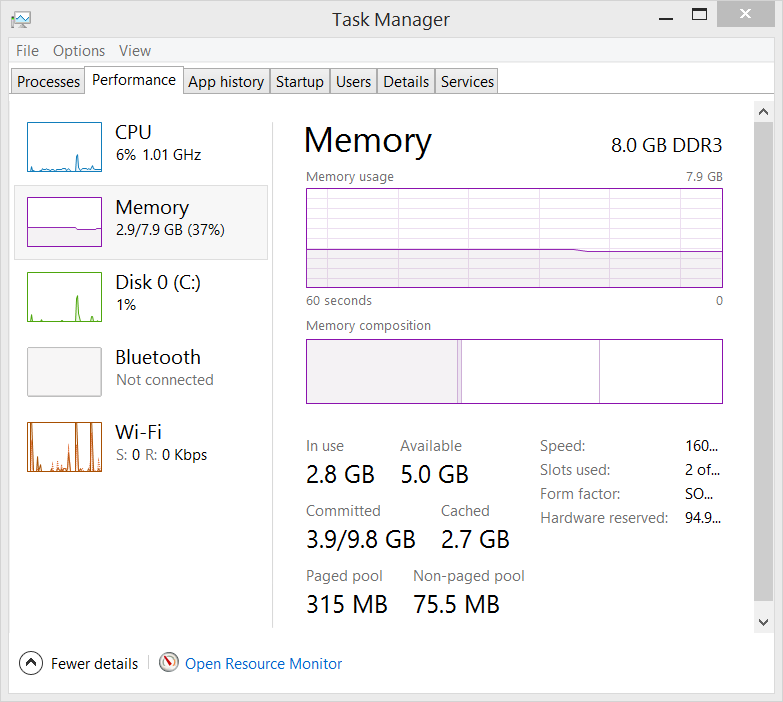

From Task Manager, you may never have noticed the Open Resource Monitor link at the bottom of the Performance tab.

Click that and open up a whole new insight into what's going on.

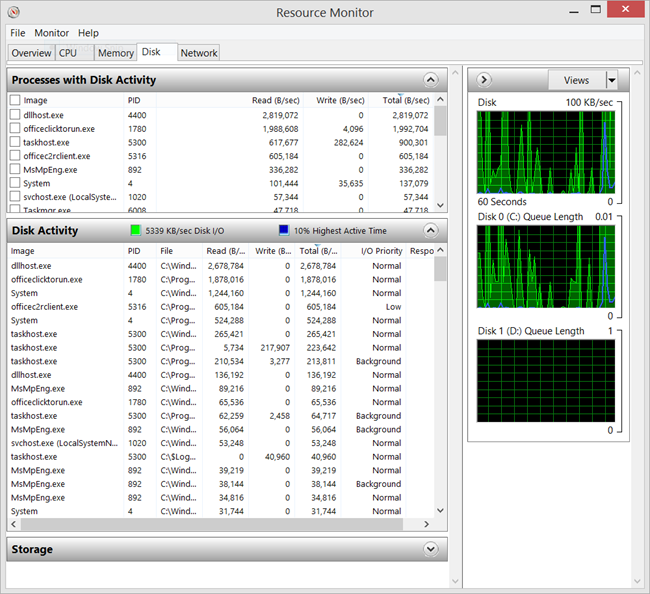

Disk

This is all great stuff but I find myself exploring the Disk tab the most.

Remember to sort by Read or Write bytes/sec. I often sort by Total and find things like Dropbox in there.
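If you ever want to do the same "sort by Total" trick on numbers you've exported or collected yourself, it boils down to something like the sketch below. The `DiskActivity` record and the sample figures are made up for illustration; they are not real measurements or a Resource Monitor export format.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DiskActivity:
    process: str
    read_bytes_per_sec: int
    write_bytes_per_sec: int

    @property
    def total_bytes_per_sec(self) -> int:
        return self.read_bytes_per_sec + self.write_bytes_per_sec


def busiest(samples: List[DiskActivity], count: int = 3) -> List[DiskActivity]:
    """Return the processes with the highest total disk throughput first."""
    ranked = sorted(samples, key=lambda s: s.total_bytes_per_sec, reverse=True)
    return ranked[:count]


# Made-up sample numbers, purely for illustration.
samples = [
    DiskActivity("System", 800_000, 900_000),
    DiskActivity("Dropbox.exe", 1_200_000, 3_400_000),
    DiskActivity("devenv.exe", 2_000_000, 150_000),
]

for row in busiest(samples):
    print(f"{row.process:<12} {row.total_bytes_per_sec:>10,} B/sec")
```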

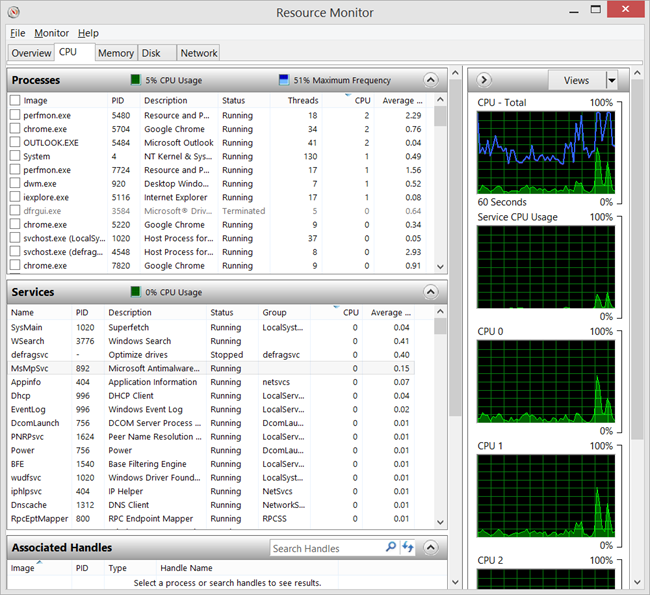

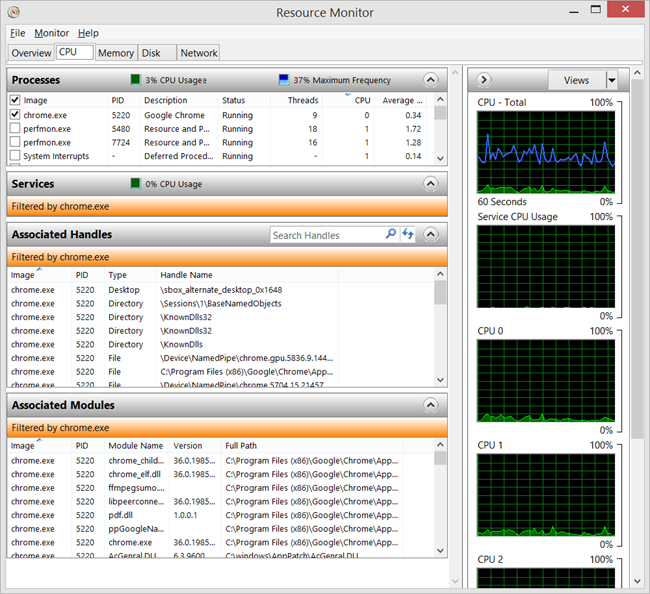

CPU and the CPU usage of Services

Task Manager is great but it doesn't easily show how much CPU is being used by a Service. Resource Monitor not only lets you easily Filter processes with a checkbox, but you can also sort services by CPU usage.

On the CPU tab is an Associated Handles pane. If Resource Monitor is a well-kept secret, then Associated Handles is a secret within a secret. You can search across all processes for an open file name (or any handle), as well as filter by Process or Service.

Network Activity

The Network Activity tab is super useful and jam-packed with information. It makes it easy to find a process from a port or TCP connection.

You have this tool and all these views now, and I suspect you might not be using it to the fullest. Perhaps you pull from a number of smaller applets or shareware utilities to pull it all together.

Once I reminded myself that Resource Monitor could be launched directly from the Task Manager (an app that I already have open a lot) I started using it even more. I may just pin it to the Taskbar!

© 2014 Scott Hanselman. All rights reserved.

Azure: Virtual Machine, Machine Learning, IoT Event Ingestion, Mobile, SQL, Redis, SDK Improvements

This past month we’ve released a number of great enhancements to Microsoft Azure. These include:

- Virtual Machines: Preview Portal Support as well as SharePoint Farm Creation

- Machine Learning: Public preview of the new Azure Machine Learning service

- Event Hub: Public preview of new Azure Event Ingestion Service

- Mobile Services: General Availability of .NET support, SignalR support

- Notification Hubs: Price Reductions and New Features

- SQL Database: New Geo-Restore, Geo-Replication and Auditing support

- Redis Cache: Larger Cache Sizes

- Storage: Support for Zone Redundant Storage

- SDK: Tons of great VS and SDK improvements

All of these improvements are now available to use immediately (note that some features are still in preview). Below are more details about them:

Virtual Machines: Support in the new Azure Preview portal

We previewed the new Azure Preview Portal at the //Build conference earlier this year. It brings together all of your Azure resources in a single management portal, and makes it easy to build cloud applications on the Azure platform using our new Azure Resource Manager (which enables you to manage multiple Azure resources as a single application). The initial preview of the portal supported Web Sites, SQL Databases, Storage, and Visual Studio Online resources.

This past month we’ve extended the preview portal to also now support Virtual Machines. You can create standalone VMs using the portal, or group multiple VMs (and PaaS services) together into a Resource Group and manage them as a single logical entity. You can use the preview portal to get deep insights into billing and monitoring of these resources, and customize the portal to view the data however you want. If you are an existing Azure customer you can start using the new portal today: http://portal.azure.com.

Below is a screen-shot of the new portal in action. The service dashboard showing service/region health can be seen in the top-left of the portal, along with billing data about my subscriptions – both make it really easy for you to see the health and usage of your services in Azure. In the screen-shot below I have a single VM running named “scottguvstest” – and clicking the tile for it displays a “blade” of additional details about it to the right – including integrated performance monitoring usage data:

The initial “blade” for a VM provides a summary view of common metrics about it. You can click any of the tiles to get even more detailed information as well.

For example, below I’ve clicked the CPU monitoring tile in my VM, which brought up a Metric blade with even more details about CPU utilization over the last few days. I’ve then clicked the “Add Alert” command within it to set up an automatic alert that will trigger (and send an email to me) any time the CPU of the VM goes above 95%:

In the screen-shot below, I’ve clicked the “Usage” tile within the VM blade, which displays details about the different VM sizes available – and what each VM size provides in terms of CPU, memory, disk IOPS and other capabilities. Changing the size of the VM being used is as simple as clicking another of the pricing tiles within the portal – no redeployment of the VM required:

SharePoint Farm support via the Azure Gallery

Built into the Azure Preview Portal is a new “Azure Gallery” that provides an easy way to deploy a wide variety of VM images and online services. VM images in the Azure Gallery include Windows Server, SQL Server, SharePoint Server, Ubuntu, Oracle, and Barracuda images.

Last month, we also enabled a new “SharePoint Server Farm” gallery item. It enables you to easily configure and deploy a highly-available SharePoint Server Farm consisting of multiple VM images (databases, web servers, domain controllers, etc) in only minutes. It provides the easiest way to create and configure SharePoint farms anywhere:

Over the next few months you’ll see even more items show up in the gallery – enabling a variety of additional new scenarios. Try out the ones in the gallery today by visiting the new Azure portal: http://portal.azure.com/

Machine Learning: Preview of new Machine Learning Service for Azure

Last month we delivered the public preview of our new Microsoft Azure Machine Learning service, a game changing service that enables your applications and systems to significantly improve your organization’s understanding across vast amounts of data. Azure Machine Learning (Azure ML) is a fully managed cloud service with no software to install, no hardware to manage, and no OS versions or development environments to grapple with. Armed with nothing but a browser, data scientists can log into Azure and start developing Machine Learning models from any location, and from any device.

ML Studio, an integrated development environment for Machine Learning, lets you set up experiments as simple data flow graphs, with an easy-to-use drag, drop and connect paradigm. Data scientists can use it to avoid programming a large number of common tasks, allowing them to focus on experiment design and iteration. A collection of best-of-breed algorithms developed by Microsoft Research comes built in, as does support for custom R code, and over 350 open source R packages can be used securely within Azure ML today.

Azure ML also makes it simple to create production deployments at scale in the cloud. Pre-trained Machine Learning models can be incorporated into a scoring workflow and, with a few clicks, a new cloud-hosted REST API can be created.

Azure ML makes the incredible potential of Machine Learning accessible both to startups and large enterprises. Startups are now able to immediately apply machine learning to their applications. Larger enterprises are able to unleash the latent value in their big data to generate significantly more revenue and efficiencies. Above all, the speed of iteration and experimentation that is now possible will allow for rapid innovation and pave the way for intelligence in cloud-connected devices all around us.

Getting Started

Getting started with the Azure Machine Learning Service is easy. Within the current Azure Portal simply choose New->Data Services->Machine Learning to create your first ML service today:

Subscribe to the Machine Learning Team Blog to learn more about the Azure Machine Learning service. And visit our Azure Machine Learning documentation center to watch videos and explore tutorials on how to get started immediately.

Event Hub: Preview of new Azure Event Ingestion Service

Today’s connected world is defined by big data. Big data may originate from connected cars and thermostats that produce telemetry data every few minutes, application performance counters that generate events every second or mobile apps that capture telemetry for every user’s individual action. The rapid proliferation of connected devices raises challenges due to the variety of platforms and protocols involved. Connecting these disparate data sources while handling the scale of the aggregate stream is a significant challenge.

I’m happy to announce the public preview of a significant new Azure service: Event Hub. Event Hub is a highly scalable pub-sub ingestor capable of elastic scale to handle millions of events per second from millions of connected devices so that you can process and analyze the massive amounts of data produced by your connected devices and applications. With this new service, we now provide an easy way for you to provision capacity for ingesting events from a variety of sources, and over a variety of protocols in a secure manner. Event Hub supports a variety of partitioning modes to enable parallelism and scale in your downstream processing tier while preserving the order of events on a per device basis.
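To see why partitioning by device preserves per-device ordering while still allowing parallel processing, consider the minimal sketch below. It is not Event Hub's actual partitioning algorithm or SDK; `partition_for` and `route` are hypothetical helpers that use a simple stable hash of the device id to pick a partition.

```python
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, str]  # (device_id, payload)


def partition_for(device_id: str, partition_count: int) -> int:
    """Map a device id to a partition using a stable hash (illustrative only)."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def route(events: Iterable[Event], partition_count: int) -> Dict[int, List[Event]]:
    """Append each event to its device's partition, in arrival order."""
    partitions: Dict[int, List[Event]] = defaultdict(list)
    for device_id, payload in events:
        partitions[partition_for(device_id, partition_count)].append(
            (device_id, payload)
        )
    return partitions


incoming = [("car-1", "speed=50"), ("thermostat-7", "temp=21"), ("car-1", "speed=55")]
for index, batch in sorted(route(incoming, partition_count=4).items()):
    print(index, batch)
```

Because every event from a given device lands in the same partition, a downstream reader of that partition sees that device's events in the order they arrived, while different partitions can be processed in parallel.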

Creating an Event Hub

You can easily create a new instance of Event Hub from the Azure Management Portal by clicking New->App Services->Service Bus->Event Hub. During the Preview, Event Hub service is available in a limited number of regions (East US 2, West Europe, Southeast Asia) and requires that you first create a new Service Bus Namespace:

Learn More

Try out the new Event Hub service and give us your feedback! For more information, visit the links below:

Mobile Services: General Availability of .NET Support, SignalR and Offline Sync

A few months ago I announced a preview of Mobile Services with .NET backend support. Today I am excited to announce the general availability of the Mobile Services .NET offering, which makes it an incredibly attractive choice for developers building mobile facing backend APIs using .NET. Using Mobile Services you can now:

- Quickly add a fully featured backend to your iOS, Android, Windows, Windows Phone, HTML or cross-platform Xamarin, Sencha, or PhoneGap app, leveraging ASP.NET Web API, Mobile Services, and corresponding Mobile Services client SDKs.

- Publish any existing ASP.NET Web API to Azure and have Mobile Services monitor and manage your Web API controllers for you.

- Take advantage of built-in mobile capabilities like push notifications, real-time notifications with SignalR, enterprise sign-on with Azure Active Directory, social auth, and offline data sync for occasionally connected scenarios. You can also take full advantage of Web API features like OData controllers, and 3rd party Web API-based frameworks like Breeze.

- Have your mobile app’s users log in via Azure Active Directory and securely access enterprise assets such as SharePoint and Office 365. In addition, we've also enabled seamless connectivity to on-premises assets, so you can reach databases and web services that are not exposed to the Internet and behind your company’s firewall.

- Build, test, and debug your Mobile Services .NET backend using Visual Studio running locally on your machine or remotely in Azure.

You can learn more about Mobile Services .NET from this blog post, and the Mobile Services documentation center.

Real-time Push with Mobile Services and SignalR

We recently released an update to our Mobile Services .NET backend support which enables you to use ASP.NET SignalR for real-time, bi-directional communications with your mobile applications. SignalR will use WebSockets under the covers when they're available, and fall back to other “techniques” (i.e. HTTP hacks) when they aren't. Regardless of the mode, your application code stays the same.
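The fallback idea can be sketched in a few lines. The transport names below are the ones SignalR uses, but the selection logic is a simplified illustration of "pick the best transport both sides support", not SignalR's actual negotiation code; `pick_transport` is a hypothetical helper.

```python
from typing import Iterable

# Ordered from most to least preferred.
PREFERRED_TRANSPORTS = ["webSockets", "serverSentEvents", "foreverFrame", "longPolling"]


def pick_transport(client_supports: Iterable[str], server_supports: Iterable[str]) -> str:
    """Return the most preferred transport available on both ends."""
    client = set(client_supports)
    server = set(server_supports)
    for transport in PREFERRED_TRANSPORTS:
        if transport in client and transport in server:
            return transport
    raise RuntimeError("client and server share no transport")


# A client behind a proxy that blocks WebSockets falls back gracefully.
print(pick_transport(
    client_supports=["serverSentEvents", "longPolling"],
    server_supports=PREFERRED_TRANSPORTS,
))
```

The application code that sends and receives messages never needs to know which transport was chosen.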

The SignalR integration with Azure Mobile Services includes:

- Turnkey Web API Integration: Send messages to your connected SignalR applications from any Web API controller or scheduled job – we automatically give you access to SignalR Hubs from the ApiServices context.

- Unified Authentication: Protect your SignalR Hubs the same way you protect any of your Mobile Service Web API controllers using a simple AuthorizeLevel attribute.

- Automatic Scale-out: When scaling out your Azure Mobile Service using multiple front-ends, we automatically scale out SignalR using Azure Service Bus as the backplane for sync’ing between the front-ends. You don’t need to do anything to scale your SignalR Hubs.

Learn more about the SignalR capability in Mobile Services from Henrik’s blog.

Mobile Services Offline Sync support for Xamarin and native iOS apps

I've blogged earlier about the new Offline Sync feature in Mobile Services, which provides a lightweight, cross-platform way for applications to work with data even when they are offline / disconnected from the network. At that time we released Offline Sync support for Windows Phone and Windows Store apps.

Today we are also introducing a preview of Mobile Services Offline Sync for native iOS apps, as well as Xamarin.iOS, and Xamarin.Android.

Mobile Services Accelerators

I’m pleased to also introduce our new Mobile Services Accelerators, which are feature-complete sample apps that demonstrate how to leverage the new enterprise features of the Mobile Services platform in an end-to-end scenario. We have two accelerator apps for you today, available as source code as well as published in the app store.

- Field Engineer App (Windows app source, Xamarin iOS app source, Windows app in the store), an app for mobile workers needing to visit customer sites to fix an issue.

- Sales Assistant App (Windows app source, Windows app in the store), an app for store managers and sales assistants that facilitates typical operations on the store floor, as well as reporting.

These apps leverage the Mobile Services .NET backend support to authenticate employees with Azure Active Directory, store data securely, work with data offline, and get reminders via push notifications. We hope you will find these apps useful as reference material for your teams. Stay tuned, as more accelerators are coming!

Notification Hubs: Price reductions and new features

The Azure Notification Hubs service enables large-scale cross-platform push notifications from any server backend running on-premises or in the cloud. It supports a variety of mobile devices including iOS, Android, Windows, Kindle Fire, and Nokia X. I am excited to announce several great updates to Azure Notification Hubs today:

- Price reduction. We are reducing the Notification Hubs price by up to 40x to accommodate a wider range of customer scenarios. With the new price (effective September 1st), customers can send 1 million mobile push notifications per month for free, and pay $1 per additional million pushes using our new Basic tier. Visit the Notification Hubs pricing page for more details.

- Scheduled Push. You can now use Notification Hubs to schedule individual and broadcast push notifications at certain times of the day. For example, you can use this feature to schedule announcements to be delivered in the morning to your customers. We include support to enable this no matter which time zone your customers are in.

- Bulk Registration management. You can now send bulk jobs to create, update or export millions of mobile device registrations at a time with a single API call. This is useful if you are moving from an old push notification system to Notification Hubs, or to import user segments from a 3rd party analytics system.
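As a quick worked example of the new pricing described in the first bullet above, the sketch below estimates a monthly bill from a push count. It assumes that usage beyond the free million is billed in whole millions, rounded up; that rounding rule is an assumption, so consult the pricing page for the actual billing granularity.

```python
import math

FREE_PUSHES_PER_MONTH = 1_000_000
USD_PER_ADDITIONAL_MILLION = 1.00


def monthly_cost_usd(pushes: int) -> float:
    """Estimate the monthly cost for a given number of pushes."""
    if pushes < 0:
        raise ValueError("pushes must be non-negative")
    extra = max(0, pushes - FREE_PUSHES_PER_MONTH)
    # Assumption: additional usage is billed per started million.
    return math.ceil(extra / 1_000_000) * USD_PER_ADDITIONAL_MILLION


for pushes in (500_000, 1_000_000, 3_500_000):
    print(f"{pushes:>9,} pushes -> ${monthly_cost_usd(pushes):.2f}")
```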

You can learn more about Azure Notification Hubs at the developer center.

SQL Databases: New Geo-Restore, Geo-Replication and Auditing support

In April 2014, we first previewed our new SQL Database service tiers: Basic, Standard, and Premium. Today, I’m excited to announce the addition of more features to the preview:

- Geo-restore: Designed for emergency data recovery when you need it most, geo-restore allows you to recover a database to any Azure region. Geo-restore uses geo-redundant Azure blob storage for automatic database backups and is available for Basic, Standard, and Premium databases in the Windows Azure Management Portal and REST APIs.

- Geo-replication: You can now configure your SQL Databases to use our built-in geo-replication support that enables you to set up an asynchronously replicated secondary SQL Database that can be failed over to in the event of disaster. Geo-replication is available for Standard and Premium databases, and can be configured via the Windows Azure Management portal and REST APIs. You can get more information about Azure SQL Database Business Continuity and geo-replication here and here.

- Auditing: Our new auditing capability tracks and logs events that occur in your database and provides dashboard views and reports that enable you to get insights into these events. You can use auditing to streamline compliance-related activities, gain knowledge about what is happening in your database, and identify trends, discrepancies and anomalies. Audit events are also written to an audit log which is stored in a user-designated Azure storage account. Auditing is now available for all Basic, Standard, and Premium databases.

You can learn even more about these new features here.

Redis Cache: Large Cache Sizes, Six New Regions, Redis MaxMemory Policy Support

This past May, we launched the public preview of the new Azure Redis Cache service. This cache service gives you the ability to use a secure, dedicated Redis cache, managed as a service by Microsoft. Using the new Cache service, you get to leverage the rich feature set and ecosystem provided by Redis, and reliable hosting and monitoring from Microsoft.

Last month we updated the service with the following features:

- Support for larger cache sizes. We now support the following sizes: 250 MB, 1 GB, 2.5 GB, 6 GB, 13 GB and 26 GB.

- Support for six new Azure Regions. The full list of supported regions can be found in the Azure Regions page.

- Support for configuring Redis MaxMemory policy

For more information on the Azure Redis Cache, check out this blog post: Lap around Azure Redis Cache.

Storage: Support for Zone Redundant Storage

We are happy to introduce a new Azure Storage account offering: Zone Redundant Storage (ZRS).

ZRS replicates your data across 2 to 3 facilities either within a single Azure region or across two Azure regions. If your storage account has ZRS enabled, then your data is durable even in the case where one of the datacenter facilities hosting your data suffers a catastrophic issue. ZRS is also more cost efficient than the existing Geo Redundant Storage (GRS) offering we have today.

You can create a ZRS storage account by simply choosing the ZRS option under the replication dropdown in the Azure Management Portal.

You can find more information on pricing for ZRS at http://azure.microsoft.com/en-us/pricing/details/storage/.

Azure SDK: WebSites, Mobile, Virtual Machines, Storage and Cloud Service Enhancements

Earlier today we released the Update 3 release of Visual Studio 2013 as well as the new Azure SDK 2.4 release. These updates contain a ton of great new features that make it even easier to build solutions in the cloud using Azure. Today’s updates include:

Visual Studio Update 3

- Websites: Publish WebJobs from Console or Web projects.

- Mobile Services: Create a Dev/Test environment in the cloud when creating Mobile Services projects. Use the Push Notification Wizard with .NET Mobile Services.

- Notification Hubs: View and manage device registrations.

Azure SDK 2.4

- Virtual Machines: Remote debug 32-bit Virtual Machines. Configure Virtual Machines, including installation & configuration of dynamic extensions (e.g. anti-malware, Puppet, Chef and custom script). Create Virtual Machine snapshots of the disk state.

- Storage: View Storage activity logs for diagnostics. Provision Read-Access Geo-redundant Storage from Visual Studio.

- Cloud Services: Emulator Express is the default option for new projects (Full Emulator is deprecated). Configure new networking capabilities in the service model.

You can learn all about the updates from the Azure team’s SDK announcement blog post.

Summary

This most recent release of Azure includes a bunch of great features that enable you to build even better cloud solutions. If you don’t already have an Azure account, you can sign up for a free trial and start using all of the above features today. Then visit the Azure Developer Center to learn more about how to build apps with it.

Hope this helps,

Scott

P.S. In addition to blogging, I am also now using Twitter for quick updates and to share links. Follow me at: twitter.com/scottgu

Work-items tracking using Release Management

Tracing what is new in a given release of a product is often a tiresome and time-consuming task. With Microsoft Release Management 2013 Update 3 RC/RTM, you can achieve this easily through its “Change Summary” feature. The details of this feature are described below.

Change Summary feature details

Manual Release

When you start a new manual release from the Release Management client, it takes you to the Release page. Now, on this Release page, there is a new tab – Change Summary:

Click on the Change Summary tab and it shows you the list of work-items that are new or changed in the current (in-progress) release. The list is relative: it contains the work-items that are new, changed, or implemented in the current release compared with the last successful release of the application:

You can also go to the already completed releases and you can see the Change Summary for each of these releases.

The Change Summary shows Id, Type, Title, Owner and State of the work-items. Should you want to look at the individual work-items in more detail, you can do that by clicking on the Id field's link which leads you to the work-item on TFS.

Note: Change Summary shows the work-item differences per stage. If you are looking at the Change Summary for a given stage, it compares work-items against the last successful release on that stage only, and shows all the work-items that are new or changed between that release and the current (in-progress) release for that stage.
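Conceptually, the per-stage comparison can be pictured as a diff between two snapshots of work-items. The sketch below is a simplified illustration of that idea, not how Release Management computes the list internally; the `WorkItem` record mirrors the columns shown in the Change Summary, while `change_summary` and the sample data are hypothetical.

```python
from typing import Dict, List, NamedTuple


class WorkItem(NamedTuple):
    item_id: int
    item_type: str
    title: str
    owner: str
    state: str


def change_summary(
    last_successful: Dict[int, WorkItem], current: Dict[int, WorkItem]
) -> List[WorkItem]:
    """Return work-items that are new or changed since the last successful release."""
    changed = [
        item
        for item_id, item in current.items()
        if last_successful.get(item_id) != item
    ]
    return sorted(changed, key=lambda item: item.item_id)


# Hypothetical snapshots for one stage.
previous = {
    101: WorkItem(101, "Bug", "Fix login redirect", "Ann", "Active"),
    102: WorkItem(102, "Task", "Update build script", "Raj", "Closed"),
}
now = {
    101: WorkItem(101, "Bug", "Fix login redirect", "Ann", "Resolved"),
    102: WorkItem(102, "Task", "Update build script", "Raj", "Closed"),
    103: WorkItem(103, "User Story", "Add export to CSV", "Mei", "Active"),
}

for item in change_summary(previous, now):
    print(item.item_id, item.item_type, item.title, item.owner, item.state)
```

Running the same comparison against a different stage's last successful release would generally give a different list, which is why the summary is reported per stage.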

Automated release (Continuous deployment process)

Releases are often triggered not manually but as part of a continuous deployment setup or through the command-line utility. In such cases, a release manager, a project manager, or a stage owner may want to view the Change Summary of work-items before approving or rejecting a release or any step in a release. They don’t need to open the Release Management client for this; they can use the Release Explorer (web client).

The Release Explorer now contains an additional link for Change Summary which shows the list of new / changed work-items:

On clicking this you can see the work-items diff in the same way as on the Release Management client:

Feature limitations

- This feature is only available in the vNext deployment pipeline. To learn more about vNext deployments, you can refer to these articles:

  - Deploying using PowerShell Desired State Configuration in Release Management

  - How to setup environments for Agent-less deployments in Release Management 2013 with Update 3 RC

  - How to deploy to Standard or Azure environments in Release Management 2013 with Update 3 RC

  - How to trigger deployments to Chef managed environments from Release Management 2013 with Update 3 RC

- Currently the feature works when you use TFVC as source control. If you use Git, this feature would not be available.

- This feature can provide work-items across multiple branches if the same is reflected through the Source directories listed on the build definition.

- Work-items from child branches with FI and RI into the working branch would not be available

- When you choose "Build Externally" with components, work-items are not available as this information is not available in the builds of these components.

Microsoft Remote Desktop Preview V8.1.3 update for Windows Phone 8.1 available today for download

My name is David Bélanger, and I’m a program manager on the Remote Desktop team working on Remote Desktop apps. Back in April, we released the first version of the Microsoft Remote Desktop Preview app for Windows Phone 8.1 and we’ve been adding new features ever since.

First, I want to say thank you for all the great feature requests you’ve added and voted for on our new uservoice site. Please keep the feedback coming as it will help us ensure we are building the app you want to use for your daily remote desktop tasks.

As we continue working on popular enterprise features like Gateway, RemoteApp and Desktop Connections and the new Microsoft Azure RemoteApp service due later this year, our updates will include a few other features. With that said, this blog highlights the changes we’ve made to the app which will be available from the Windows Phone Store later today.

Cortana integration

To get started with Cortana, the Windows Phone personal assistant, we’ve added voice commands allowing you to quickly connect to a desktop you’ve previously added by using the display name (the name that appears on the desktop thumbnail in the "desktops" page).

- Remote desktop connect to [display name]

or

- Remote desktop to [display name]

For example, if your Connection Center looks like the one below, you could give Cortana the following commands:

- “Remote desktop connect to DAVID dash PC”

- “Remote desktop to my work PC”

Figure 1: Example of a Connection Center showing the display names.

Have some thoughts on cool ways we could integrate more deeply with Cortana? We’d love to see new suggestions on our feature request site.

Phone will remain unlocked while in a remote desktop session

Another change we made based on the feature requests we received is to prevent the phone from locking while in a remote desktop session. This will make it easier to monitor a PC over a long period of time.

Try it now

Whether you are the owner of a new Windows Phone 8.1 device or have upgraded your existing Windows Phone 8 device to the Developer Preview version, I encourage you to download the Remote Desktop Preview app from the Windows Phone store today and enjoy the rich Windows experience on your Windows Phone 8.1 device.

The Remote Desktop app is also available on your other devices (Windows 8.1, iOS, Mac OS X, and Android).

Stay tuned for future updates as we work on adding Microsoft Azure RemoteApp support and more enterprise-focused features including Remote Desktop Gateway, RD Connection Broker, and Remote Resources (RemoteApp and Desktop Connections) later this year.

Note: Questions and comments are welcome. However, please DO NOT post a request for troubleshooting by using the comment tool at the end of this post. Instead, post a new thread in the Remote Desktop clients forum. Thank you!

August updates for Windows Server 2012 R2

In today’s Windows Experience blog, we communicated our intent to deliver regular improvements to Windows in order to address feedback quickly while continuing to bring you an enterprise-class platform. We also provided a heads up that the next update for Windows happens on August 12.

On August 12, we will also release an update to Windows Server 2012 R2. In addition to regular security updates, this update will deliver bug fixes that will improve performance and reliability for your infrastructure. There are no changes to system APIs, so your applications should “just work” without the need for re-certification or re-validation.

Similar to Windows 8.1, we will make these Windows Server 2012 R2 updates available for consumption starting August 12th through existing distribution mechanisms, including Windows Update and Windows Server Update Services.

August updates for Windows 8.1 and Windows Server 2012 R2

In April, we released a bigger, more comprehensive package of improvements to Windows 8.1 called the Windows 8.1 Update. We did the same for Server too, with Windows Server 2012 R2 Update. As we said at the time, our goal is to continue to deliver improvements to Windows through regular updates in order to respond more quickly to customer and partner feedback. After all, we already have a regular monthly update process that includes security and non-security updates.

With the above in mind, rather than waiting for months and bundling together a bunch of improvements into a larger update as we did for the Windows 8.1 Update, customers can expect that we’ll use our already existing monthly update process to deliver more frequent improvements along with the security updates normally provided as part of “Update Tuesday.” So despite rumors and speculation, we are not planning to deliver a Windows 8.1 “Update 2.”

We’ll continue to use our normal channels such as Windows Update (WU), Microsoft Update (MU), and Windows Server Update Services (WSUS) to deliver updates to Windows. These updates will include security updates to help keep you protected, as well as non-security updates that can bring a range of improvements to your PC or tablet running Windows. Examples of some of these non-security updates are the Windows Store Refresh in May and the June update to OneDrive to improve your control of sync. Some of these improvements might be more visible or even new features, while others might be more “behind-the-scenes” that improve things like the performance and reliability of your device. With these monthly updates, we continue to refine and improve Windows 8.1 in a more nimble way, creating a richer experience for all Windows customers.

Following are some of the new features and improvements included in the August 12th Update Tuesday:

- Precision touchpad improvements – three new end-user settings have been added: leave the touchpad on when a mouse is connected, allow right-clicks on the touchpad, and double-tap and drag.

- Miracast Receive – exposes a set of Wi-Fi Direct APIs that Independent Hardware Vendor (IHV) or OEM driver developers can use to build Windows 32-bit applications that run on all supported x86-based or x64-based versions of Windows 8.1, enabling the computer to act as a Miracast receiver.

- Minimizing login prompts for SharePoint Online – reduces the number of prompts with federated use in accessing SharePoint Online sites. If you select the “Keep me signed in” check box when you log on for the first time, you will not see prompts for successive access to that SharePoint Online site.

This update will be delivered automatically via WU and optionally through WSUS. Enterprises can take the update anytime. Further details on the update will be included here on August 12th.

For current Windows 8.1 consumers who have elected automatic updates, the rollout will be gradual to ensure all customers receive the update in a timely manner. Those who wish to install the update ahead of automatic updates may do so beginning August 12th manually via Windows Update.

As you might expect, we’re taking the same approach to deliver improvements to Windows Server 2012 R2 as well – go here for additional information.

Office 365 API tool for Visual Studio 2013 – summer update

Chakkaradeep Chandran is a program manager with Visual Studio.

Today, we are releasing an update to the Office 365 API Tool for Visual Studio 2013, which includes Office 365 API client libraries for .NET applications and JavaScript for multi-device hybrid applications. This update addresses key feedback and includes bug fixes made since the previous release.

Tooling updates

Updated flow

When you open the tool for the first time to add Office 365 APIs to your project, the Register your app link makes it explicit that you are registering a new application in Microsoft Azure Active Directory (AAD).

When you click Register your app, you are required to sign in with your Office 365 credentials. Once successfully signed in, the tool displays the available Office 365 services that you can add to your project.

Support for managing redirect URIs in ASP.NET web application projects

Previously, if you published your web application project to a web server for staging/testing, you would get an error that the URL is not recognized, and the app would fail to authenticate with AAD. With the latest tooling update, you can specify Redirect URIs directly in the tooling for your web application project, making it easy to add the URL of your testing site (though you should still create a separate AAD entry for pushing the app to production).

You can learn more about Redirect URIs by clicking the authentication response link in the tool or by reading the documentation here.

Status messages in Visual Studio output window

The tool now logs messages in the Visual Studio output window so you can see what changes are made once you add Office 365 services to your project.

Support for Windows Phone 8.1 projects in Visual Studio

With today’s update, you can now associate your Windows Phone 8.1 project with an Azure Active Directory application using the Office 365 API tool.

This makes the following changes to your Windows Phone 8.1 project:

- Registers an Azure Active Directory application for your Windows Phone project in Windows Azure.

- Adds the following NuGet package to your project: Microsoft Azure Active Directory Authentication Library (ADAL).

We are working on supporting the Office 365 API client libraries for Windows Phone 8.1 projects, but in the meantime, you can use the tool to register the Azure Active Directory application and configure the Office 365 services and permissions required for your Windows Phone project.

The Office 365 API client libraries section explains how to authenticate and call Office 365 APIs from your Windows Phone 8.1 projects.

Office 365 API client libraries

This update brings several changes and some new features to the Office 365 API client libraries.

Changes to Office 365 API authentication library

The previous release of Office 365 client libraries included the initial release of the Office 365 API authentication library, which was built on top of the Active Directory Authentication Library (ADAL). Based on your feedback we have improved the interoperability of the Office 365 API authentication library with ADAL to work well with other Azure-based services.

Note: Today’s update brings a breaking change to the code required to authenticate with Office 365 APIs. The Office 365 APIs and Tooling are still in preview, and we do try to avoid breaking changes. In this instance, because of the amount of feedback we got from developers, we thought the change was worth it before we formally release them.

For non-Xamarin-based applications, the new authentication flow is as follows (for Xamarin, continue to use the previously documented flow):

- Create the Office 365 Discovery Context.

    DiscoveryContext discoveryContext = await DiscoveryContext.CreateAsync();

- Authenticate using Resource ID or Discovery Service.

Using Resource ID:

    ResourceDiscoveryResult dcr =
        await discoveryContext.DiscoverResourceAsync(ServiceResourceId);

Using Discovery Service:

    CapabilityDiscoveryResult dcr =
        await discoveryContext.DiscoverCapabilityAsync("Contacts");

- Create the respective client object and pass in a delegate to retrieve the access token.

    // Delegate that the client invokes whenever it needs an access token.
    Func<Task<string>> getAccessToken = async () =>
    {
        UserIdentifier userId = new UserIdentifier(dcr.UserId, UserIdentifierType.UniqueId);
        string clientId = discoveryContext.AppIdentity.ClientId;

        // Reuse the cached token (or refresh it silently) for the signed-in user.
        AuthenticationResult authResult = await discoveryContext.AuthenticationContext.AcquireTokenSilentAsync(ServiceResourceId, clientId, userId);
        return authResult.AccessToken;
    };

    ExchangeClient exchangeClient = new ExchangeClient(ServiceEndpointUri, getAccessToken);

Updates to multi-device hybrid Cordova applications

- The files installed by the Office 365 API tools are now organized in a much more intuitive folder structure.

- Support has also been added for SharePoint Files. In concert with SharePoint Files, support for the Office 365 Discovery service is provided, which helps to find the SharePoint service endpoints for the logged-in user. For example, the following code sample authenticates a user, discovers the user’s SharePoint service endpoint, and then retrieves the user’s files and folders from the SharePoint File service:

    // Returns the entry whose capability name matches, or undefined if none does.
    getCapability(capabilities: O365Discovery.ServiceCapability[],
                  capability: string): O365Discovery.ServiceCapability {
        var result;
        capabilities.forEach((serviceCapability, index, array) => {
            if (serviceCapability.capability === capability) {
                result = serviceCapability;
            }
        });
        return result;
    }

    getFiles() {
        var authContext = new O365Auth.Context();
        var discoveryContext = new O365Discovery.Context();

        // Discover the user's service endpoints, then query the SharePoint File service.
        discoveryContext.services(authContext.getAccessTokenFn('Microsoft.SharePoint'))
            .then(value => {
                var capability = this.getCapability(value, 'MyFiles'),
                    sharePoint = new MS.SharePointClient(capability.endpointUri,
                        authContext.getAccessTokenFn(capability.resourceId));

                sharePoint.files.getFileSystemItems().fetch().then(
                    value => {
                        value.currentPage.forEach((fileOrFolder, index, array) => {
                            // process file or folder…
                        });
                    }, error => {
                        // process error…
                    });
            }, error => {
                // process error…
            });
    }
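
The sample above assumes that the requested capability is always present in the discovery results. A minimal, self-contained sketch of the same lookup with an explicit check might look like the following. The `ServiceCapability` interface here is a local stand-in reduced to the three fields the sample reads, and `findCapability`, the `example.invalid` endpoints, and the sample data are made up for illustration; they are not part of the Office 365 client libraries.

```typescript
// Stand-in for O365Discovery.ServiceCapability, reduced to the fields
// the sample above reads. This local type is an assumption for the sketch.
interface ServiceCapability {
  capability: string;
  endpointUri: string;
  resourceId: string;
}

// Returns the first entry whose capability name matches, or undefined.
function findCapability(
  capabilities: ServiceCapability[],
  wanted: string
): ServiceCapability | undefined {
  const matches = capabilities.filter((c) => c.capability === wanted);
  return matches.length > 0 ? matches[0] : undefined;
}

// Made-up discovery results, for illustration only.
const discovered: ServiceCapability[] = [
  { capability: "Contacts", endpointUri: "https://example.invalid/contacts", resourceId: "https://example.invalid/" },
  { capability: "MyFiles", endpointUri: "https://example.invalid/files", resourceId: "https://example.invalid/" },
];

const myFiles = findCapability(discovered, "MyFiles");
if (myFiles === undefined) {
  // Fail early instead of dereferencing undefined further down.
  throw new Error("MyFiles capability not found for this user");
}
console.log(myFiles.endpointUri);
```

Making the "not found" case part of the return type lets the compiler insist on the check before `endpointUri` or `resourceId` is read.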

Windows Store, Windows Forms, web, and Xamarin apps

Based on your feedback, several bug fixes were made in the client libraries. For Windows Store, Windows Forms, and web apps, the authentication flow has been refactored to give the developer better control over discovery and authentication results.

Windows Phone 8.1 apps

While our client libraries do not yet support Windows Phone 8.1 (we are working on it!), you can interact with the Office 365 service endpoints by using the Active Directory Authentication Library (ADAL). When you configure a Windows Phone 8.1 application to use Office 365 APIs, a reference to ADAL is added to your project. Sample code is available on GitHub.com at Azure AD Windows Phone 8.1 sample (as an aside, we also offer many good Azure AD samples). To interact with the services at the HTTP level, you must authenticate with Azure AD (using the sample for guidance). Once you have obtained an access token from ADAL, add it to the HTTP headers as follows:

    string accessToken = "The access token that ADAL provides.";

    HttpClient client = new HttpClient();
    HttpRequestMessage request =
        new HttpRequestMessage(HttpMethod.Get, new Uri("https://outlook.office365.com/ews/odata/Me/Inbox"));

    // Send the token as a Bearer credential in the Authorization header.
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);

    var response = await client.SendAsync(request);

Installing the latest update

To install the latest update:

- Go to Office 365 API Tools – Preview and click Download.

- Once the download is complete, open the .VSIX file to begin the setup.

Once you’ve updated the tool, invoke the Office 365 API tool by right-clicking your project in Solution Explorer and selecting Add -> Connected Service.

For new projects, you will get the latest Office 365 API client libraries automatically by installing the latest tools. For existing projects using older versions of the libraries, you can manually update them as follows:

- In Visual Studio, right-click your project and then select Manage NuGet Packages.

- In the left pane, select Updates.

- In the Updates list, select Include Prerelease.

You should now see updates available for the Office 365 API Client Libraries.

- For each Office 365 API Client Library, click Update to update the libraries to the latest version.

Have feature requests? Let us know on UserVoice.

Have questions? Connect with us on StackOverflow. Tag your questions with [Office365] & [API].

Be sure to also connect with the team on the Office 365 Developer Network on Yammer.

Don’t miss the Channel 9 video, Office 365 API Tools for Visual Studio – Building Office 365 Android Apps with Xamarin, with Microsoft’s Beth Massi (@BethMassi).

Your feedback about the tools and APIs is important to us.

—Chakkaradeep Chandran

The post Office 365 API tool for Visual Studio 2013 – summer update appeared first on Office Blogs.

Office 365 API Tools for Visual Studio – Building Office 365 Android Apps with Xamarin

The Office 365 APIs allow you to easily integrate Office 365 services into your apps in a consistent way. You can access user data like calendars, documents and more using REST APIs and standard OAuth flows from any platform. The Office 365 API Tools for Visual Studio make it super easy for developers to access the services via .NET or JavaScript client libraries. These tools are currently in preview.

Yesterday the team released a new preview! Download the latest version here: http://aka.ms/Office365ApiToolsPreview

And read about the exciting new features here, including Windows Phone 8.1 support: Office 365 API tool for Visual Studio 2013 – summer update

I've been meeting up with team members building these tools and have been watching them progress through this preview period. In this interview I once again sit down with Chakkaradeep Chandran (Chaks), a Program Manager on this project. This time we talk about working with Xamarin in Visual Studio and building an Android app that connects to your Office 365 contacts.

Watch: Office 365 API Tools for Visual Studio - Building Office 365 Android Apps with Xamarin

Also, there’s something funny if you watch to the end. We have an unexpected visitor walk into the interview which causes my “teacher face” to come out. Yes folks I do these interviews in conference rooms not professional studios so sometimes these things happen. What’s funny is the person that walks in on us works on Channel 9! Classic.

Have questions about the Office 365 API tools? Head to StackOverflow and tag your questions with [Office365] & [API]. Or connect with the team on the Office 365 Developer Network on Yammer.

Enjoy!

Support Tip: Running certain reports in the Configuration Manager console causes the console to crash

~ Eric Ellis

Hi everyone, Eric Ellis here with a quick support tip for you about how a bad report in System Center Configuration Manager 2007 can potentially cause the admin console to crash.

The last time I ran into this, the customer was using ConfigMgr 2007 R3 in their environment, and when they would run the "Compliance 1 - Overall Compliance" report in the ConfigMgr console it caused the console to crash. The same behavior didn’t happen with any of the other reports, nor did it occur when running the same report via the SQL Server Reporting Services URL.

The details of the console crash included the following:

An error occurred in ConfigMgr. The ConfigMgr console will continue to function, however you should save your work and restart the console. After restarting the console, verify that your last configurations have been applied.

A related SMSAdminUI.log contained the following entry:

The report definition is not valid or supported by this version of Reporting Services. This could be the result of publishing a report definition of a later version of Reporting Services, or that the report definition contains XML that is not well-formed or the XML is not valid based on the Report Definition schema. Details: The element 'TablixMembers' in namespace 'http://schemas.microsoft.com/sqlserver/reporting/2010/01/reportdefinition' has incomplete content. List of possible elements expected: 'TablixMember' in namespace 'http://schemas.microsoft.com/sqlserver/reporting/2010/01/reportdefinition'.

What we ultimately discovered was that the default report had been edited in a later version of SSRS Report Builder than is supported by ConfigMgr 2007. This introduced schema differences to the RDL file, thus causing the console to crash.
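
Because an RDL file declares its schema version in the `xmlns` attribute of its root `Report` element, one quick way to tell whether a report was last saved by a newer authoring tool is to read that namespace. The sketch below is only an illustration in TypeScript: the function names and the sample strings are made up, and it assumes the namespace is written with double quotes as a default `xmlns`. The year mapping follows the published RDL namespaces (2005/01 for SSRS 2005, 2008/01 for SSRS 2008, and 2010/01, the one in the error above, for SSRS 2008 R2).

```typescript
// Extracts the schema year from the report-definition namespace found in
// the text of an .rdl file. Returns null when no such namespace is present.
function rdlSchemaYear(rdlText: string): string | null {
  const match = /xmlns="http:\/\/schemas\.microsoft\.com\/sqlserver\/reporting\/(\d{4})\/01\/reportdefinition"/.exec(rdlText);
  return match ? match[1] : null;
}

// The ConfigMgr 2007 console expects the SSRS 2005 report schema,
// according to the product team FAQ referenced in this post.
function isSupportedByConfigMgr2007Console(rdlText: string): boolean {
  return rdlSchemaYear(rdlText) === "2005";
}

// Minimal made-up documents; real RDL files contain much more content.
const workingRdl =
  '<Report xmlns="http://schemas.microsoft.com/sqlserver/reporting/2005/01/reportdefinition"></Report>';
const editedRdl =
  '<Report xmlns="http://schemas.microsoft.com/sqlserver/reporting/2010/01/reportdefinition"></Report>';

console.log(isSupportedByConfigMgr2007Console(workingRdl)); // true
console.log(isSupportedByConfigMgr2007Console(editedRdl)); // false
```

A check like this only inspects the declared namespace; it does not validate the rest of the report definition against the schema.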

The Configuration Manager product team touches on this issue here:

FAQ - SQL Reporting Services Integration with System Center Configuration Manager 2007 R2

The article linked above states the following:

“Partial support for authoring and running reports in a SQL Server 2008 environment

- The Configuration Manager 2007 R2 release uses the SQL Reporting Services 2005 report schema underneath and hence reports authored using Business Development Studio 2008 (as bundled with SQL Server 2008) and Report Builder 2.0 will not run from the Configuration Manager 2007 R2 administration console. However you can author reports using any of the tools available with SQL Reporting Services 2008 and can run these reports from the SQL Reporting Services Web UI directly.”

If you’re unsure whether this is your issue, you can easily tell by looking at the RDL file itself. For example, here’s the relevant snippet from a correct, working RDL file:

Here’s an example of one edited using a later version:

With this in mind, there are three possible ways forward:

Option 1: View the reports in question via the SQL Server Reporting Services Report Manager.

Option 2: Replace the modified copy of the report in question using the following steps:

a. Obtain an unmodified/default copy of the report file (*.RDL).

b. Open SSRS Report Manager and navigate to the location of the report.

c. Make a backup copy of the current report by clicking the pull-down menu associated with this report, clicking the “Download” option, and saving the copy to a desired location, as shown below.

d. Overwrite the existing copy of the report in SSRS Report Manager:

- Open SSRS Report Manager and navigate to the location of the report.

- Click on the pull down menu associated with the report and click on the “Manage” option.

- In the report Properties window, click the “Replace” button.

- Click the Browse button, navigate to the location of the unmodified/default copy of the report file, click OK, and Apply.

- Click the “Home” link to return to the home page of the Report Manager.

- Browse to the location of the report in the Report Manager, run the report, and ensure that it functions properly.

- If running the report in Report Manager is successful, run the report from within the ConfigMgr 2007 console to ensure that the report is rendered without crashing.

Option 3: If the edits made to the report are required, and you need to be able to open the modified reports in the ConfigMgr 2007 console, the modifications must be made in the SSRS 2005 edition of Report Builder. Once this has been done, upload the SSRS 2005 version of the report into the SSRS 2008 R2 Report Manager and overwrite the previous version of the report using steps similar to those in Option 2 above.

Eric Ellis | Senior Support Escalation Engineer | Microsoft GBS Management and Security Division

Get the latest System Center news on Facebook and Twitter:

System Center All Up: http://blogs.technet.com/b/systemcenter/

System Center – Configuration Manager Support Team blog: http://blogs.technet.com/configurationmgr/

System Center – Data Protection Manager Team blog: http://blogs.technet.com/dpm/

System Center – Orchestrator Support Team blog: http://blogs.technet.com/b/orchestrator/

System Center – Operations Manager Team blog: http://blogs.technet.com/momteam/

System Center – Service Manager Team blog: http://blogs.technet.com/b/servicemanager

System Center – Virtual Machine Manager Team blog: http://blogs.technet.com/scvmm

Windows Intune: http://blogs.technet.com/b/windowsintune/

WSUS Support Team blog: http://blogs.technet.com/sus/

The AD RMS blog: http://blogs.technet.com/b/rmssupp/

MED-V Team blog: http://blogs.technet.com/medv/

Server App-V Team blog: http://blogs.technet.com/b/serverappv

The Forefront Endpoint Protection blog: http://blogs.technet.com/b/clientsecurity/

The Forefront Identity Manager blog: http://blogs.msdn.com/b/ms-identity-support/

The Forefront TMG blog: http://blogs.technet.com/b/isablog/

The Forefront UAG blog: http://blogs.technet.com/b/edgeaccessblog/

Windows Phone folders: A new way to organize your Start screen

There’s no right or wrong way to arrange your Tiles on Start—there’s only your way. And now, with folders in Windows Phone 8.1 Update, you can make your Start screen more you-ier than ever before. If it’s a Tile on Start, you can add it to a folder. Just push one Tile over another one to make it happen.

Folders let you group apps, people, websites, and anything else that can be pinned to Start. Below, we (Windows Phone writers Dylan and Matt) provide examples of how we use folders under the guise of doing “research” for work.

Find out when you can get Windows Phone 8.1 Update (including folders) on your phone here.

Make your voyage extra bon (Dylan’s vacation folder)

The most stressful part of any big trip I take is getting there. That includes packing, getting to the airport, and freaking out when I can’t find my passport—only to realize it’s been in my back pocket all along.

But the next time I go on vacation, I’ll be prepared with a travel folder on my Windows Phone Start screen chock full of stress-reducing and good time-enhancing apps, maps, and more. I can see the folder now…

- Packing list. I can keep my packing list OneNote note in my travel folder. This way I have it handy when I shop for my trip and I don’t leave anything critical behind.

- Weather. I don’t want to be stuck in t-shirt territory when the forecast calls for sweater weather. A Tile for my destination from the Bing Weather app should do the trick.

- Airline app. No check-in counter for this efficient flyer: I plan to keep the airline’s app in my folder so I can get to my digital boarding pass when I need it.

- Restaurants/places. After enduring airplane food, I’m always ready for something fresh and local upon my arrival. A few recommended restaurants in my travel folder will make it easy to find good eatin’.

- Directions. Deciding where to eat is only half the battle—I’ll still need to find the place. From the Maps app, I can pin a few restaurant locations to Start, then add them to my folder. (Tip within a tip: Download a map before going to a foreign country to avoid using data.)

Boy, Hypothetical Future Me sure is a savvy traveler!

The thrill of victory and the (frequent) agony of defeat (Matt’s sports folder)

I’ve looked for ways to keep sports from taking over my Start screen. A Tile here, a Tile there, and before you know it, Tiles for sports everywhere. As a long-suffering Cleveland sports fan, it’s not always good news on those Live Tiles either, so I might as well keep all my sports-related misery in one place. Here are some things I have in my sports folder:

- Scores. I made the Cleveland Indians one of my interests in Cortana’s Notebook. After pinning that interest to Start and putting it in my folder, I get the score for today’s game right on the Live Tile. If I want more detailed info and stats about the game, I just tap the Tile to get it.

- Fantasy teams. Keeping track of my fantasy baseball team is easier now. I just go into my sports folder and tap the Tile for my team to see how they’re doing. (Nowadays, the answer is usually “Not so good.”) But fantasy football season is right around the corner, so I’ll have more fantasy teams to check on soon enough. Maybe the news will be a little better.

- Sports radio. On the way to work in the morning, I happily settle into my seat on the bus knowing that I’m just a tap or two from sports radio bliss and the latest sports news.

- Sports news apps. And of course, I have a bunch of sports apps (ESPN Hub, Bing Sports, and Bleacher Report to name a few—I know, I’m a bit fanatical) to help me keep up on all the latest sports news throughout the day.

Unfold-er the possibilities

Travel and sports are of course just a few of the endless number of things you can put in folders to keep your Start screen looking nice and clean. Here are a few more quick ideas we had to get you started:

- Projects at work or school. Put all your Word docs, PowerPoint slides, OneNote notes, and other things in a folder to keep projects organized and on track.

- Social networking. Put your social networking apps in one place to stay on top of what’s going on.

- People. Create a Brady Bunch-esque folder of your friends and family for easy access. Text, email, or call them in a couple of taps.

- Music and videos. Add albums, artists, websites, and whatever else, so your favorite music and videos are at your fingertips.

- Cooking. Add your favorite recipes to a folder, toss in a dash of your favorite food blogs, and then stir in your grocery list from OneNote. You’ll be cooking in no time.

What creative folder ideas can you come up with?

- Dylan Romero and Matt Lichtenberg

MultiDictionary becomes MultiValueDictionary

We just shipped an update to our experimental implementation of a multi value dictionary. In this post, our software developer intern Ian Hays talks about the changes. -- Immo

Goodbye MultiDictionary

In my last post I went over MultiDictionary, officially available on NuGet as the prerelease package Microsoft.Experimental.Collections. We received great feedback, questions and commentary in the comments, and it was clear that this was something that a lot of you felt passionately about (70 comments? Awesome!). We’ve read all of your comments and taken them into consideration for this next iteration of Microsoft.Experimental.Collections.

You should also check out our interview on Channel 9:

Hello MultiValueDictionary

First off, let’s talk about the name. It was a bit ambiguous what the “Multi” in “MultiDictionary” referred to: at first glance, “multi” could mean there were multiple keys per value, or a dictionary of dictionaries, or that it was a bi-directional dictionary. To make it explicit and leave room for other variants in the future, we’ve renamed the type to MultiValueDictionary to clarify that the type allows multiple values for a single key.

Let’s get right to the meat of the post: what’s changed? We’ll go into some of the major design decisions and changes that make up the new MultiValueDictionary in the next sections.

IEnumerable of…?

MultiDictionary could be thought of as a Dictionary<TKey, TValue> where we could have multiple elements with the same TKey. MultiValueDictionary is more akin to a Dictionary<TKey, IReadOnlyCollection<TValue>> with a number of methods to enable easy modification of the internal IReadOnlyCollections. This distinction may seem subtle, but it affects how you consume the data structure.

For example, let’s look at the Count and Values properties. MultiDictionary would return the number of values and a collection of values, while MultiValueDictionary returns the number of keys and a collection of IReadOnlyCollections of values.

// MultiDictionary

var multiDictionary = new MultiDictionary<string, int>();

multiDictionary.Add("key", 1);

multiDictionary.Add("key", 2);

//multiDictionary.Count == 2

//multiDictionary.Values contains elements [1,2]

// MultiValueDictionary

var multiValueDictionary = new MultiValueDictionary<string, int>();

multiValueDictionary.Add("key", 1);

multiValueDictionary.Add("key", 2);

//multiValueDictionary.Count == 1

//multiValueDictionary.Values contains elements [[1,2]]

This behavioral change also affects the enumerator in the same way that it affects the Values property. Previously the dictionary was flattened when enumerating, as it implemented IEnumerable<KeyValuePair<TKey, TValue>>. MultiValueDictionary now implements IEnumerable<KeyValuePair<TKey, IReadOnlyCollection<TValue>>>.

var multiValueDictionary = new MultiValueDictionary<string, int>();

multiValueDictionary.Add("key", 1);

multiValueDictionary.Add("key", 2);

multiValueDictionary.Add("anotherKey", 3);

foreach (KeyValuePair<string, IReadOnlyCollection<int>> key in multiValueDictionary)

{

foreach (int value in key.Value)

{

Console.WriteLine("{0}, {1}", key.Key, value);

}

}

// key, 1

// key, 2

// anotherKey, 3

As Sinix pointed out in the previous blog post comments, this is very similar to another type in the .NET Framework, ILookup<TKey, TElement>. MultiValueDictionary shouldn’t implement both the dictionary and lookup interfaces, because that would cause it, through interface inheritance, to implement two different versions of IEnumerable: IEnumerable<KeyValuePair<TKey, IReadOnlyCollection<TValue>>> and IEnumerable<IGrouping<TKey, TValue>>. It wouldn’t be clear which version you would get when using foreach. But since MultiValueDictionary logically implements the lookup concept, we’ve added a method AsLookup() to MultiValueDictionary which returns an implementation of the ILookup interface.

var multiValueDictionary = new MultiValueDictionary<string, int>();

multiValueDictionary.Add("key", 1);

multiValueDictionary.Add("key", 2);

multiValueDictionary.Add("anotherKey", 3);

var lookup = multiValueDictionary.AsLookup();

foreach (IGrouping<string, int> group in lookup)

{

foreach (int value in group)

{

Console.WriteLine("{0}, {1}", group.Key, value);

}

}

// key, 1

// key, 2

// anotherKey, 3

Indexing and TryGetValue

In the first iteration of the MultiDictionary we followed the precedent set by LINQ’s ToLookup() with regard to the way indexing into the MultiDictionary worked. In a regular Dictionary, if you attempt to index into a key that isn’t present you’ll get a KeyNotFoundException, but like a Lookup, the MultiDictionary returned an empty list instead. This was mostly to match the functionality of the Lookup class, which is conceptually similar to the MultiDictionary, but also because this behavior was more practical for the kinds of things you’d be using the MultiDictionary for.
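To make the difference concrete, here is a small sketch that uses only types from the .NET Framework itself (no experimental package). It contrasts how a LINQ lookup and a regular Dictionary react to a missing key. The class and method names are made up for this illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

static class MissingKeyDemo
{
    // Returns how many elements a LINQ lookup yields for a key it does not contain.
    public static int LookupCountForMissingKey()
    {
        var pairs = new[]
        {
            new KeyValuePair<string, int>("key", 1),
            new KeyValuePair<string, int>("key", 2)
        };
        ILookup<string, int> lookup = pairs.ToLookup(p => p.Key, p => p.Value);

        // Indexing a lookup with an unknown key gives an empty sequence, not an exception.
        return lookup["notkey"].Count();
    }

    // Returns true if indexing a regular Dictionary with a missing key throws.
    public static bool DictionaryThrowsForMissingKey()
    {
        var dictionary = new Dictionary<string, int> { { "key", 1 } };
        try
        {
            int unused = dictionary["notkey"];
            return false;
        }
        catch (KeyNotFoundException)
        {
            return true;
        }
    }
}
```

The first method returns 0 and the second returns true, which is exactly the inconsistency between the two families of types described above.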

With the behavior changes brought on by the MultiValueDictionary and the addition of the AsLookup() method, this old functionality doesn’t quite fit anymore. We heard feedback that this inconsistency between MultiDictionary and Dictionary was confusing, so the MultiValueDictionary will now throw a KeyNotFoundException when indexing on a key that isn’t present. We’ve also added a TryGetValue method to accommodate the new behavior.

var multiValueDictionary = new MultiValueDictionary<string, int>();

multiValueDictionary.Add("key", 1);

//multiValueDictionary["notkey"] throws a KeyNotFoundException

IReadOnlyCollection<int> collection = multiValueDictionary["key"];

multiValueDictionary.Add("key", 2);

//collection contains values [1,2]

Another related change in MultiValueDictionary concerns the return value of the indexer. Previously we returned a mutable ICollection<TValue>. Adding and removing values from the returned ICollection<TValue> updated the MultiDictionary. While there are uses for this functionality, it can be unexpected and create unintentional coupling between parts of an application. To address this we’ve changed the return type to IReadOnlyCollection<TValue>. The read-only collection will still update with changes to the MultiValueDictionary.
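The indexer, TryGetValue and the "live" read-only view can be sketched with a tiny stand-in type. This is not the package's implementation: TinyMultiValueDictionary and IndexerDemo are hypothetical names, the stand-in exposes its inner List directly for brevity, and returning null from TryGetValue for a missing key is a choice made for this sketch only.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for illustration only; not the package's implementation.
class TinyMultiValueDictionary<TKey, TValue>
{
    private readonly Dictionary<TKey, List<TValue>> _inner =
        new Dictionary<TKey, List<TValue>>();

    // Counts keys, not individual values.
    public int Count { get { return _inner.Count; } }

    public void Add(TKey key, TValue value)
    {
        List<TValue> list;
        if (!_inner.TryGetValue(key, out list))
        {
            list = new List<TValue>();
            _inner.Add(key, list);
        }
        list.Add(value);
    }

    // Throws KeyNotFoundException for a missing key, like Dictionary does.
    // The inner List is returned directly; a real type would wrap it.
    public IReadOnlyCollection<TValue> this[TKey key]
    {
        get { return _inner[key]; }
    }

    // Reports a missing key through the return value instead of an exception.
    public bool TryGetValue(TKey key, out IReadOnlyCollection<TValue> values)
    {
        List<TValue> list;
        bool found = _inner.TryGetValue(key, out list);
        values = list; // null when the key is missing (sketch-only choice)
        return found;
    }
}

static class IndexerDemo
{
    public static bool MissingKeyIsReportedNotThrown()
    {
        var dictionary = new TinyMultiValueDictionary<string, int>();
        dictionary.Add("key", 1);
        IReadOnlyCollection<int> values;
        return !dictionary.TryGetValue("notkey", out values) && values == null;
    }

    public static int LiveViewCount()
    {
        var dictionary = new TinyMultiValueDictionary<string, int>();
        dictionary.Add("key", 1);
        IReadOnlyCollection<int> view = dictionary["key"];
        dictionary.Add("key", 2);   // added after the view was obtained
        return view.Count;          // the view reflects the later Add
    }
}
```

The view has no Add or Remove members, so callers cannot change the dictionary through it, yet it still sees values added later.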

When a List just doesn’t cut it

One limitation of the MultiDictionary was that internally, it used a Dictionary<TKey, List<TValue>> and there was no way to change the inner collection type. With the MultiValueDictionary we’ve added the ability to specify your own inner collection.

Showing a simple example of how they work is probably easier than trying to describe them first, so let’s do that.

var multiValueDictionary = MultiValueDictionary<string, int>.Create<HashSet<int>>();

multiValueDictionary.Add("key", 1);

multiValueDictionary.Add("key", 1);

//multiValueDictionary["key"].Count == 1

Above, we replace the default List<TValue> with a HashSet<TValue>. As the example shows, HashSet<TValue> combines duplicate TValues.

For every constructor there is a parallel generic static Create method that takes the same parameters but allows specification of the interior collection type. It’s important to point out that this doesn’t affect the return value of the indexer/TryGetValue though (they return very limited IReadOnlyCollections regardless of the inner collection type).

If you want a little bit more control over how your custom collection is instantiated, there are also more specific Create methods that allow you to pass a delegate that creates the inner collection:

var multiValueDictionary = MultiValueDictionary<string, int>.Create<HashSet<int>>(myHashSetFactory);

multiValueDictionary.Add("key", 1);

multiValueDictionary.Add("key", 1);

//multiValueDictionary["key"].Count == 1

In either case, the specified collection type must implement ICollection<TValue> and must not have IsReadOnly set to true by default.
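The post does not show what a factory delegate such as myHashSetFactory looks like, so here is a rough, self-contained sketch of the idea. FactoryBackedMultiMap and FactoryDemo are hypothetical names, the type is a simplified stand-in rather than the package's code, and the exception thrown for a read-only collection is an assumption made for this illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for illustration only; not the package's implementation.
class FactoryBackedMultiMap<TKey, TValue>
{
    private readonly Dictionary<TKey, ICollection<TValue>> _inner =
        new Dictionary<TKey, ICollection<TValue>>();
    private readonly Func<ICollection<TValue>> _factory;

    public FactoryBackedMultiMap(Func<ICollection<TValue>> factory)
    {
        if (factory == null) throw new ArgumentNullException("factory");

        // A collection that starts out read-only could never accept values.
        // The exception type here is an assumption of this sketch.
        if (factory().IsReadOnly)
            throw new InvalidOperationException("The inner collection must not be read-only.");

        _factory = factory;
    }

    public void Add(TKey key, TValue value)
    {
        ICollection<TValue> values;
        if (!_inner.TryGetValue(key, out values))
        {
            values = _factory();    // one inner collection per key
            _inner.Add(key, values);
        }
        values.Add(value);
    }

    public int CountFor(TKey key)
    {
        return _inner[key].Count;
    }
}

static class FactoryDemo
{
    public static int DuplicatesWithHashSet()
    {
        Func<ICollection<int>> myHashSetFactory = () => new HashSet<int>();
        var map = new FactoryBackedMultiMap<string, int>(myHashSetFactory);
        map.Add("key", 1);
        map.Add("key", 1);          // the HashSet ignores the duplicate
        return map.CountFor("key");
    }

    public static int DuplicatesWithList()
    {
        var map = new FactoryBackedMultiMap<string, int>(() => new List<int>());
        map.Add("key", 1);
        map.Add("key", 1);          // the List keeps both values
        return map.CountFor("key");
    }

    public static bool RejectsReadOnlyCollections()
    {
        try
        {
            new FactoryBackedMultiMap<string, int>(() => new List<int>().AsReadOnly());
            return false;
        }
        catch (InvalidOperationException)
        {
            return true;
        }
    }
}
```

A factory is handy when the inner collection needs constructor arguments, for example a HashSet created with a custom comparer.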

And that’s all!

You can download the new MultiValueDictionary from NuGet and try it out for yourself! If you have any questions or if you just want to give feedback, please leave a comment or contact us.

Auditing in Azure SQL Database

I'm very excited to share the hard work some of my peers have been doing - Auditing in Azure SQL Database. After focusing on a bunch of behind the scenes security improvements, it's good to get something customer facing. Check out http://azure.microsoft.com/en-us/documentation/articles/sql-database-auditing-get-started/ and this video - http://channel9.msdn.com/Shows/Data-Exposed/Auditing-in-Azure-SQL-Database. It's a great start to what I hope are many customer facing features.

Announcing RTM of ASP.NET Identity 2.1.0

We are releasing the RTM of ASP.NET Identity 2.1.0. The main focus of this release was to fix bugs and to add SignInManager, which makes it easier to use security features such as account lockout and two-factor authentication for login.

ASP.NET Identity 2.1 is included in the ASP.NET templates which were released with VS 2013 Update 3. The templates have been updated to include Two-Factor Authentication.

Download this release

You can download ASP.NET Identity from the NuGet gallery. You can install or update these packages using the NuGet Package Manager Console, like this:

Install-Package Microsoft.AspNet.Identity.EntityFramework -Version 2.1.0

Install-Package Microsoft.AspNet.Identity.Core -Version 2.1.0

Install-Package Microsoft.AspNet.Identity.OWIN -Version 2.1.0

What’s in this release?

Following is the list of features and major issues that were fixed in 2.1.0.

SignInManager

SignInManager makes it easier to add two-factor authentication, account lockout and other features when you log in.

SignInManager was in the samples package in ASP.NET Identity 2.0. In version 2.1, we have added it to the framework.

The following tutorials cover account confirmation and Two-Factor Authentication (including account lockout and protecting against brute force attacks against the two factor authentication codes)

The following shows the login code of an application that uses two-factor authentication and account lockout.
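As a rough sketch of that flow (not the template's actual code), a login action typically branches on the sign-in result. The enum below is a local stand-in that mirrors the four values of Microsoft.AspNet.Identity.Owin.SignInStatus so the sample compiles on its own. LoginFlowSketch and NextStep are hypothetical names, and the returned strings merely describe what a controller would do at each branch.

```csharp
using System;

// Local stand-in mirroring the values of Microsoft.AspNet.Identity.Owin.SignInStatus.
enum SignInStatus { Success, LockedOut, RequiresVerification, Failure }

static class LoginFlowSketch
{
    // Hypothetical helper: maps a sign-in result to what the login action does next.
    public static string NextStep(SignInStatus status)
    {
        switch (status)
        {
            case SignInStatus.Success:
                return "redirect to the requested page";
            case SignInStatus.LockedOut:
                return "show the lockout view";
            case SignInStatus.RequiresVerification:
                return "ask for a two-factor code";
            case SignInStatus.Failure:
            default:
                return "redisplay the login form with an error";
        }
    }
}
```

In a real application the status would come from a SignInManager call such as PasswordSignInAsync, and each branch would return a view or a redirect instead of a string.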

List of bugs fixed

You can look at all the bugs that were fixed in this release by clicking here.

Enable Two-Factor Authentication in ASP.NET templates using VS 2013 Update 3

The following steps summarize the experience of enabling two-factor authentication using SMS in ASP.NET MVC. You can follow the same steps for other templates such as Web Forms as well. You can also write your own two-factor authentication provider (for example, one that uses QR codes with authenticator apps) and plug it in.

For a more detailed walkthrough of the code and the concepts refer to the following article http://www.asp.net/identity/overview/features-api/two-factor-authentication-using-sms-and-email-with-aspnet-identity

- Create ASP.NET MVC Template and select Authentication using Individual User Accounts

- Open Views\Manage\Index.cshtml and enable the “Add Phone Number” option by uncommenting the following lines:

- <dt>Phone Number:</dt>

- <dd>

- @(Model.PhoneNumber ?? "None") [

- @if (Model.PhoneNumber != null)

- {

- @Html.ActionLink("Change", "AddPhoneNumber")

- @: |

- @Html.ActionLink("Remove", "RemovePhoneNumber")

- }

- else

- {

- @Html.ActionLink("Add", "AddPhoneNumber")

- }

- ]

- </dd>

- Open Views\Manage\Index.cshtml and enable “Two-Factor Authentication”:

- <dt>Two-Factor Authentication:</dt>

- <dd>

- @if (Model.TwoFactor)

- {

- using (Html.BeginForm("DisableTwoFactorAuthentication", "Manage", FormMethod.Post, new { @class = "form-horizontal", role = "form" }))

- {

- @Html.AntiForgeryToken()

- <text>Enabled

- <input type="submit" value="Disable" class="btn btn-link" />

- </text>

- }

- }

- else

- {

- using (Html.BeginForm("EnableTwoFactorAuthentication", "Manage", FormMethod.Post, new { @class = "form-horizontal", role = "form" }))

- {

- @Html.AntiForgeryToken()

- <text>Disabled

- <input type="submit" value="Enable" class="btn btn-link" />

- </text>

- }

- }

- </dd>

- Register an SMS provider in App_Start\IdentityConfig.cs

- You can use any SMS service, such as Twilio. For more information on using Twilio see this post. Your SMS service should look as follows.

- // Needs: using System.Threading.Tasks; using Microsoft.AspNet.Identity; using Twilio;

- public class SmsService : IIdentityMessageService

- {

- public Task SendAsync(IdentityMessage message)

- {

- var Twilio = new TwilioRestClient(Keys.TwilioSid, Keys.TwilioToken);

- var result = Twilio.SendMessage(Keys.FromPhone, message.Destination, message.Body);

- // Twilio doesn't currently have an async API, so return success.

- return Task.FromResult(0);

- }

- }

- Register a user

- Browse to the site and register as a new user.

- Go to the Manage page: after logging in, click your username to open the Manage page.

- Add a Phone Number and verify it

- You need to add a verified phone number before you can use it to get text messages for two-factor authentication.

- Enable Two-Factor Authentication

- Click the “Two-Factor Authentication” option to enable it.

- Log out, then log in using your username and password

- When you log in you will be prompted to select the two-factor authentication provider to use to get the code. You can choose between Email and SMS, and you can also write your own two-factor authentication providers, such as QR code generators for authenticator apps.

- Enter the code you received through SMS and verify it

- Check the Remember the Two-Factor Authentication option

- Checking this checkbox exempts you from needing to use 2FA to log on with that computer and browser. You can do this on any private machine you regularly use. By setting Remember this browser, you get the added security of 2FA on computers you don't regularly use, and the convenience of not having to go through 2FA on your own computers.

- Account Lockout

- If you enter the code incorrectly 5 times, the account will be locked out for 5 minutes. After those 5 minutes you will be able to log in again without requiring any admin action.

- The account lockout feature in ASP.NET Identity can be configured inside the ApplicationUserManager in App_Start\IdentityConfig.cs as follows.

- // Configure user lockout defaults

- manager.UserLockoutEnabledByDefault = true;

- manager.DefaultAccountLockoutTimeSpan = TimeSpan.FromMinutes(5);

- manager.MaxFailedAccessAttemptsBeforeLockout = 5;

- When you enter the two-factor verification code, the VerifyCode action uses the SignInManager to complete the two-factor sign-in process and protects against brute force attacks on the two-factor codes.

- //

- // POST: /Account/VerifyCode

- [HttpPost]

- [AllowAnonymous]

- [ValidateAntiForgeryToken]

- public async Task<ActionResult> VerifyCode(VerifyCodeViewModel model)

- {

- if (!ModelState.IsValid)

- {

- return View(model);

- }